Metaverse – a technological evolution

Understanding the metaverse as a technological evolution

Metaverse is the most talked-about, understood and misunderstood topic these days. In most of my sessions people ask: What is the technology stack for the Metaverse? What should I learn to stay relevant? I will try to cover these questions in this article. In my earlier article I tried to define the Metaverse, and my friend Vinodh Arumugam also attempted the same here. It is defined so loosely that almost every industry today is trying to define it in its own way to suit its narrative. This could end up creating many sub-metaverses with different sets of features, and would lead to confusion and conflicts of interest. Anyhow, this will evolve with time.

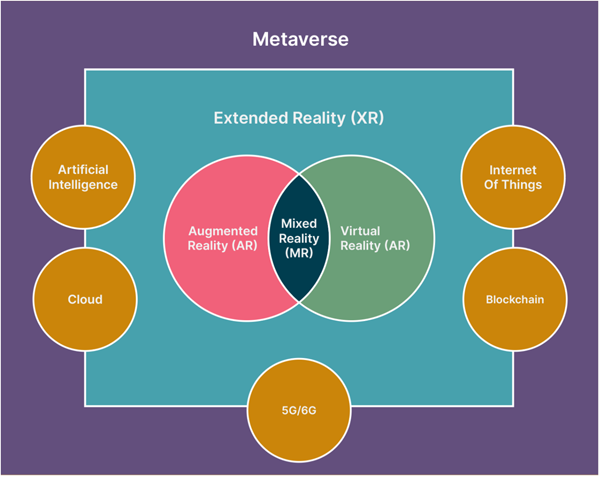

The current stage of the metaverse is like “a solution waiting for the problems to happen”: there are a lot of promises and claims without real, acceptable usages. This situation is changing with the evolution of technology and businesses, and wider acceptance of the metaverse is in the making. The accepted definition of the Metaverse will also evolve with time. We are still far from the true metaverse; today's metaverse hype is really the evolution of technology, a set of technology pieces coming together, and it may continue like that. Here are the building blocks of the Metaverse. Evolution within these building blocks is driving metaverse adoption.

eXtended Reality (XR)

The evolution of user experience and interactions is the key driver for XR adoption. Access to a camera and the internet is now a very basic thing for users; norms are changing, and people are accepting being live on camera and sharing more with the world. This is bringing many metaversal use cases to life. There has been a lot of advancement in AR/VR devices in recent years, which is making the new user experience possible.

Head-Mounted Displays to Normal-Looking Smart Glasses: The earlier bulky AR/VR head-mounted devices are turning into lightweight, normal-looking smart glasses with better capability than ever, e.g. Lenovo A3, Nreal and 250+ devices in line. Not just that, displays are now getting as small as contact lenses.

Mobile phones are evolving into wearable computing units that power smart glasses and other wearables. Most head-mounted devices today are tethered to a PC, a mobile phone, or some Android-based computing unit. This has enabled traditional development practices to be reused and improved for XR development.

Mobile XR: Mobile phones themselves are capable of running AR/VR applications without the need for smart glasses. Google ARCore and Apple ARKit have made it possible for mobile developers to build XR applications for mobile phones. Unity 3D Engine and Unity AR Foundation have taken this to the next level, along with other engines and SDKs such as Unreal, Vuforia and more. Smartphones are working to include advanced capabilities like depth sensing and semantic scene understanding out of the box.

WebXR: Web-based XR technology is bringing the XR experience directly to the web browser. Many players are making it possible with traditional WebGL, with favourite JavaScript libraries such as ThreeJS and BabylonJS, or with the likes of 8th Wall.

OpenXR: An open initiative for bringing multiple devices, makers, and communities closer to the characteristics of the Metaverse. OpenXR is being adopted by the Qualcomm Snapdragon XR platform, and Qualcomm is fuelling the metaverse in a big way. More and more XR devices (Lenovo A3, Nreal, and more) will be using XR1/XR2 chipsets from Qualcomm. SDKs from Meta and Microsoft HoloLens also support OpenXR.

Volumetric displays and projectors are also growing, bringing the XR experience without wearing any display device.

Smart mirrors with cameras are arriving that track a person's movement and show contextual information right in front of the user. Head-up displays have also long been available as an Augmented Reality use case.

Other peripheral devices like haptic gloves, trackpads and keyboards are all growing and evolving.

Artificial Intelligence (AI)

As we discussed in the last section, user interaction is evolving fast. XR devices and development kits now come with out-of-the-box artificial intelligence capabilities to understand the environment semantically, as follows:

Identity detection: XR-capable devices are now able to identify humans by detecting the face, iris, skin texture, fingerprint, etc. Authentication and authorisation in the metaverse will be key to safeguarding identity, as people will have multiple metaversal identities. These capabilities are important for metaversal development.

Image and Object Recognition: Scanning the scene and understanding it semantically is now an important part of sharing the environment in the metaverse or creating a digital twin of it. Devices and SDKs are coming with built-in image and object recognition and plane detection capability. Tracking the detected objects naturally is important, especially where they get occluded by other real or virtual objects.

Gesture detection: XR interactions have evolved; users can now use their hands naturally with different hand gestures and don’t need physical controllers. Users can also control XR apps with eye tracking or facial expressions. Not just that, XR devices are evolving to detect and track full-body posture, so applications can react to full-body movement. You might have seen a metaversal conference call where avatars have only the upper half of the body, but that will change soon, as AI on head-worn devices can now also predict the movement of the bottom half of the body. Ref here.

Voice recognition: Avatars or digital twins of us are replacing us in the metaverse, and they need to replicate and animate our voice, lip movement, and more. Voice recognition is at the core of this, and XR devices now also support voice-based interactions to control XR applications.

Spatial tracking: Real-time tracking of the device with respect to the environment is a basic need for XR applications; the technique is called Simultaneous Localisation And Mapping (SLAM). Most devices are now capable of tracking in 6DoF (six Degrees of Freedom: three for position and three for orientation).
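
To make the six degrees of freedom concrete, here is a minimal sketch of a pose with three translational and three rotational components. The class name `Pose6DoF`, the axis convention (+x right, +y up, -z forward) and the rotation order (roll, then pitch, then yaw) are assumptions made for illustration; real SDKs usually expose poses as a position plus a quaternion or matrix.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """Hypothetical 6DoF pose: 3 translational + 3 rotational degrees of freedom."""
    x: float = 0.0      # metres, +x is right
    y: float = 0.0      # metres, +y is up
    z: float = 0.0      # metres, -z is forward
    yaw: float = 0.0    # radians, rotation about the y axis
    pitch: float = 0.0  # radians, rotation about the x axis
    roll: float = 0.0   # radians, rotation about the z axis

    def to_world(self, point):
        """Map a point from device-local coordinates to world coordinates."""
        px, py, pz = point
        # Roll: rotate about the z axis.
        c, s = math.cos(self.roll), math.sin(self.roll)
        px, py = px * c - py * s, px * s + py * c
        # Pitch: rotate about the x axis.
        c, s = math.cos(self.pitch), math.sin(self.pitch)
        py, pz = py * c - pz * s, py * s + pz * c
        # Yaw: rotate about the y axis.
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        px, pz = px * c + pz * s, -px * s + pz * c
        # Finally translate by the device position.
        return (px + self.x, py + self.y, pz + self.z)


# A device one metre to the right of the origin, turned 90 degrees to its left.
pose = Pose6DoF(x=1.0, yaw=math.pi / 2)
forward = pose.to_world((0.0, 0.0, -1.0))
```

A 3DoF device would track only the three rotation fields; the three position fields are what let the user walk around content in 6DoF.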

Spatial Sound: Sound is very important for spatial applications, and it needs to reflect naturally based on the environment. Read more details here.
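
As a rough illustration of the simplest part of spatial sound, the sketch below computes left and right channel gains from the position of a sound source relative to the listener. It covers only distance attenuation and direction (an inverse-distance model with constant-power panning); reflections from the environment are not modelled. The function name `stereo_gains` and its parameters are assumptions for this example, not any SDK's API.

```python
import math


def stereo_gains(listener, source, ref_distance=1.0):
    """Return (left, right) gains for a source on the floor plane.

    listener, source: (x, z) positions in metres; the listener faces -z
    and +x is to the listener's right.
    """
    dx = source[0] - listener[0]
    dz = source[1] - listener[1]
    distance = math.hypot(dx, dz)
    # Inverse-distance attenuation, clamped so nearby sources are not amplified.
    attenuation = ref_distance / max(distance, ref_distance)
    # pan is -1 for fully left, 0 for centre, +1 for fully right.
    pan = dx / distance if distance > 0 else 0.0
    # Constant-power panning: map pan to an angle between 0 and pi/2.
    angle = (pan + 1.0) * math.pi / 4.0
    return attenuation * math.cos(angle), attenuation * math.sin(angle)


left, right = stereo_gains(listener=(0.0, 0.0), source=(2.0, 0.0))
```

Here a source two metres to the right ends up almost entirely in the right channel at half the reference gain.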

Image/Video Processing: Live video processing, deep learning, and sensing the environment are becoming useful tech for metaverse use cases. See here RealityScan and NVIDIA Instant NeRF.

Brain Computer Interface (BCI): The next big bet in evolving interactions is controlling the metaverse just by thought. See some developments: Snap acquired NextMind, Galea from OpenBCI, or Emotiv.

Cloud Technologies

Cloud technologies have also evolved with time, and they have been acting as a catalyst for scaling emerging tech. There has always been a battle between private, public and hybrid cloud, and a balancing game among them. Infra management and maintenance is always a key cost consideration in digital modernisation.

While XR devices are getting more powerful and capable, that comes at a cost: lower battery life, bigger form factor, overheating, more wiring, etc. This limits them for longer usage. Cloud-based XR is seen as the solution for scaling XR, and the following evolutions are helping its adoption:

Cloud Rendering: Rendering XR content puts a huge load on XR devices, and currently not all XR content can be rendered on these devices. A lot of effort is being put into optimising XR content for different devices. This restricts the use of photorealistic content in XR, which means a sub-optimal experience. XR is all about believing in the virtual content just as if it were real, and this breaks the experience. A device SDK can provide an automatic way to switch between local and cloud rendering (near or far cloud). Read here about Azure Remote Rendering and NVIDIA CloudXR.
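
The switching idea can be sketched as a simple decision function. This is not how Azure Remote Rendering or NVIDIA CloudXR decide anything; the `RenderContext` fields, the triangle budget and the 20 ms latency threshold are all hypothetical values chosen only to show the shape of the logic.

```python
from dataclasses import dataclass


@dataclass
class RenderContext:
    """Hypothetical inputs an SDK might use to pick a rendering location."""
    triangle_count: int          # complexity of the content to render
    device_triangle_budget: int  # what the headset can render comfortably
    near_cloud_latency_ms: float # round trip to an edge ("near") server
    far_cloud_latency_ms: float  # round trip to a regional ("far") data centre


def choose_renderer(ctx: RenderContext, max_latency_ms: float = 20.0) -> str:
    """Pick where to render; the threshold is an assumption, not a standard."""
    if ctx.triangle_count <= ctx.device_triangle_budget:
        return "local"            # the device can handle it on its own
    if ctx.near_cloud_latency_ms <= max_latency_ms:
        return "near-cloud"       # offload to the closest server
    if ctx.far_cloud_latency_ms <= max_latency_ms:
        return "far-cloud"        # offload further away if still fast enough
    return "local-reduced"        # fall back to simplified content on device


choice = choose_renderer(RenderContext(500_000, 100_000, 10.0, 60.0))
```

In this example the content is five times over the device budget and the edge server answers in 10 ms, so the sketch picks the near cloud.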

Cloud Anchors: Another key cloud requirement is mapping the world: finding the feature points in the environment and storing those feature points in the cloud. These are called Cloud Anchors. Read here about Google Cloud Anchors and Spatial Anchors from Microsoft Azure.

Other general cloud usage continues here too: real-time data transfer using WebRTC/WebSocket, storing data in the cloud, integrating with other SaaS, and managing XR devices with MDM solutions.

Internet Of Things (IoT)

XR devices are already acting as the hub of an IoT ecosystem: they have a number of connected sensors, have internet access, can do some level of local computing, and can talk to connected cloud services. So XR devices are, in one sense, more evolved IoT devices. They can connect to other IoT devices over different IoT protocols (BLE, Zigbee, IR, etc.) to understand the environment even better.

In the industrial context, a connected ecosystem can bring more fruitful results. For example, it can bring up contextual information about a machine when the user is in its proximity, and the machine can be accurately identified by merging multiple identification methods such as image/object recognition and IR/proximity data.
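
Merging identification methods can be as simple as combining a score from each source. The sketch below assumes a vision model that returns a confidence per machine and a proximity sensor that returns a distance per machine; the function name `identify_machine`, the equal weighting and the 5 m range are illustrative assumptions, and the machine IDs are made up.

```python
def identify_machine(vision_scores, proximity_m, max_range_m=5.0):
    """Fuse vision confidence with proximity to pick the most likely machine.

    vision_scores: {machine_id: confidence between 0 and 1}
    proximity_m:   {machine_id: measured distance in metres}
    """
    combined = {}
    for machine_id in set(vision_scores) | set(proximity_m):
        vision = vision_scores.get(machine_id, 0.0)
        distance = proximity_m.get(machine_id)
        # Nearness is 1.0 at zero distance and 0.0 at or beyond max range.
        nearness = 0.0 if distance is None else max(0.0, 1.0 - distance / max_range_m)
        # Equal weighting is an arbitrary choice for this sketch.
        combined[machine_id] = 0.5 * vision + 0.5 * nearness
    return max(combined, key=combined.get) if combined else None


# Two similar-looking presses: vision alone slightly prefers press-A,
# but the user is standing much closer to press-B.
best = identify_machine(
    vision_scores={"press-A": 0.60, "press-B": 0.55},
    proximity_m={"press-A": 4.0, "press-B": 1.0},
)
```

With these inputs the proximity data outweighs the small difference in vision confidence, so `press-B` is selected.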

Not just that, the IoT ecosystem can handshake with XR devices, read or process data from them, and behave accordingly. In a nutshell, XR devices can become the ears and eyes for traditional devices, and the Enterprise Metaverse is aiming for exactly this: to bring humans and machines/robots closer and let them work together.

Blockchain

Metaverse is evolving in multiple directions, and sustainability is one of the characteristics of the metaverse. Content creation, and making content available for public consumption, is an important part of the metaverse's survival and its adoption for general use. Currently there is no standard way to distribute or monetise digital content. You might ask why I am discussing this here in the blockchain section: how is blockchain linked to the metaverse?

One reason is NFTs, an evolution of blockchain technology that claims to boost the content creation economy by letting creators monetise their assets. I am not very sure how long this will fly, but currently it is really hyped. A number of NFT-based marketplaces are booming in this area, for example Cryptopunks, Hashmasks, SuperRare, Rarible, Sandbox, NFTically, WazirX.

Another reason is another characteristic of the metaverse: it should not be controlled or owned centrally by one party, but rather driven by communities and people. This leads to a de-centralised architecture for metaverse solutions, and the blockchain distributed app architecture comes close to it. So it is more “de-centralisation” than blockchain itself that is related to the metaverse. De-centralisation in the form of near/remote computing is also a factor here. Decentraland is building a marketplace on blockchain tech, and giants like Samsung are also adopting it.

Traditional payment methods are evolving to include NFT/crypto-based payment modes, and a whole new era of advertisement business for virtual real estate is coming into play. The current stage of blockchain-based architecture may not deliver the speed the metaverse needs, but the more people use it, the more it will evolve naturally. There is always a tussle between forces for centralisation (government, enterprises, authorities, auditors) and decentralisation (community, people, open ecosystem, etc.), and it will continue like that.

nG Network

We are talking about huge data transfers, so network speed has to be really high to meet the metaverse's need for real-time environment sharing and live collaboration. Evolution to 5G/6G will bring more metaverse adoption. It’s all about experience: if the immersion breaks, it will not look real. It has to be close to the real world. There would be no storage on the device, as devices become very lightweight wearables; everything can be processed remotely and transferred via the super-fast network.
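
To get a feel for the data volumes involved, here is a back-of-the-envelope calculation of the raw bitrate of an uncompressed stereo video stream. The resolution, frame rate and colour depth are illustrative assumptions, not the specification of any particular headset, and real systems compress video heavily, so treat this as an upper bound.

```python
def raw_video_bitrate(width, height, fps, bits_per_pixel=24, eyes=2):
    """Bits per second for uncompressed video, one image per eye."""
    return width * height * bits_per_pixel * fps * eyes


# Assumed example: 1920x1080 per eye, 60 frames per second, 24-bit colour.
bits_per_second = raw_video_bitrate(1920, 1080, 60)
gigabits_per_second = bits_per_second / 1e9
print(f"{gigabits_per_second:.2f} Gbit/s uncompressed")
```

Even this modest configuration needs close to 6 Gbit/s before compression, which is why remote rendering depends on both efficient codecs and fast, low-latency networks.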

Telecom providers are also evolving; see MEC (multi-access edge computing) with 5G by Verizon.

XR devices also need to go wireless, and solutions like LiFi are evolving to catch up. Even charging of these devices is evolving with wireless chargers. 5G rollout is in trial; I hope it will arrive faster for enterprise use cases first and then get into the hands of consumers. So the enterprise metaverse will take advantage of 5G/6G sooner than the consumer side, since enterprises can have controlled high-speed networks.

Satellite internet is also evolving and claims to provide a high-speed, low-latency network. Other development is happening on quantum networks for a safer internet.

Conclusion

Evolution is a natural process, and the same is true for technology and businesses. Technology emerges, gets tested, and is conceptualised for real use. There are always some loopholes, constraints and limitations with emerging tech or business; it goes through multiple evolutions before it becomes ready for prime time. The same is true for the Metaverse, which is being driven by a set of technological evolutions. The more it gets explored, the more it will evolve, and we hope to reach the true stage of the metaverse in the future.

Characteristics of Metaverse

- Metaverse is Open and Independent: The Metaverse has to be open to use and open to build on top of, and it must not be controlled by anyone.

- Metaverse is One and Interoperable: The Metaverse has to be one and only one, interoperable and integrable across software and hardware.

- Metaverse is for everyone and self-sustainable: The Metaverse should be accessible to everyone, and has to be self-sustainable, where people can buy and sell things and earn to survive there.

In a nutshell, the Metaverse is the next Internet: it is touted to be the next natural step in the evolution of the internet, with use cases to boot.

About the Author

Kuldeep has built his career empowering businesses with the Tech@Core approach. He has incubated IoT and AR/VR Centres of Excellence with a strong focus on building development practices such as CICD, TDD, automation testing and XP around new technologies.

Kuldeep has developed innovative solutions that impact effectiveness and efficiency across domains, right from manufacturing to aviation, commodity trading and more. Kuldeep also invests time into evangelizing concepts like connected worker, installation assistant, remote expert, indoor positioning and digital twin, using smart glasses, IoT, blockchain and ARVR technologies within the CXO circles.

He has led several complex data projects in estimations, forecasting and optimization and has also designed highly scalable, cloud-native and microservices based architectures.

He is currently associated with ThoughtWorks, as a Principal Consultant, Engineering and Head of XR Practice, India. He has worked with Nagarro as a Director of Technology.

Kuldeep holds a B.Tech (Hons) in Computer Science and Engineering from National Institute of Technology, Kurukshetra. He also spends his time as a speaker, mentor, juror and guest lecturer at various technology events, and is an ex-member of The VRAR Association. He is also a mentor at social communities such as Dream Mentor, tealfeed.com and PeriFerry.

He can be contacted at

E-Mail : [email protected] / [email protected]

Linkedin : https://www.linkedin.com/in/ku

Twitter : https://twitter.com/thinkuldee

Facebook : https://www.facebook.com/kulde

Instagram : https://www.instagram.com/acco

Blog : https://medium.com/xrpractices

Personal Website : https://thinkuldeep.com/

Company Website : https://www.thoughtworks.com/p