Statistical AI: The Analytical Core of the Next-Generation AI Ecosystem

A White Paper on the Future Paradigm of Intelligent Systems, Tools, and Industry Transformation

Introduction:

The global AI landscape is shifting from deterministic pattern recognition toward a new intelligence frontier grounded in statistical reasoning, probabilistic inference, and causal understanding.

This transformation marks the rise of Statistical AI — a paradigm that blends the rigor of statistical science with the scalability of modern machine learning (ML). Statistical AI is not an alternative to AI; it is the foundation of its future.

By embedding inference, uncertainty quantification, and contextual learning at the core of algorithms, Statistical AI creates systems that are data-efficient, interpretable, and adaptive across domains — from financial modeling and healthcare analytics to industrial automation and energy systems.

This white paper outlines:

- the technical evolution driving Statistical AI,

- industry-grade tools and frameworks enabling it,

- sectoral applications, and

- a strategic vision for integrating Statistical AI as a national and enterprise capability.

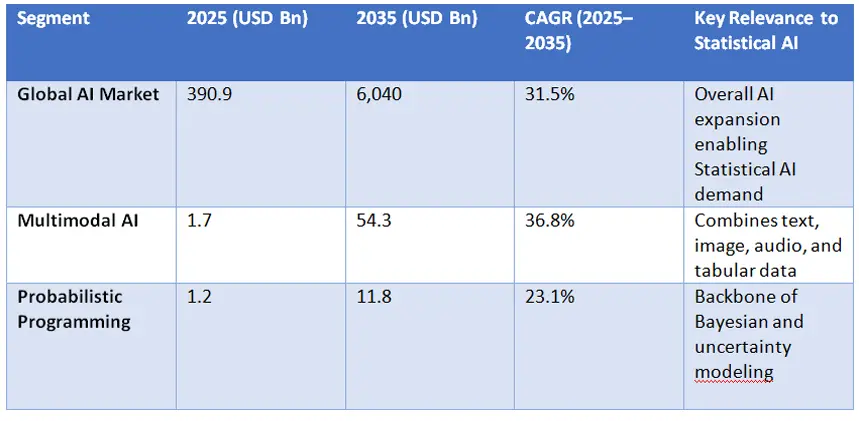

The Market Outlook: The Decade of Statistical AI:

The worldwide AI industry is expanding exponentially. According to Grand View Research, the global AI market will grow from USD 390.9 billion in 2025 to USD 3.5 trillion by 2033 — a CAGR of approximately 31.5%. Extending this projection through 2035 yields a potential market size of around USD 6 trillion.
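These figures follow from plain compound growth: value × (1 + CAGR)^years. The minimal sketch below reproduces them. The function names are illustrative, and the inputs are the USD-billion figures quoted in this section.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound `value` forward for `years` years at a constant annual rate `cagr`."""
    return value * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Market sizes quoted in the text, in USD billions.
start_2025 = 390.9
end_2033 = 3500.0

rate = implied_cagr(start_2025, end_2033, 2033 - 2025)
print(f"Implied CAGR 2025-2033: {rate:.1%}")
print(f"2033 at 31.5%: USD {project(start_2025, 0.315, 8) / 1000:.2f} trillion")
print(f"2035 at 31.5%: USD {project(start_2025, 0.315, 10) / 1000:.2f} trillion")
```

The implied rate comes out at about 31.5%, and the 2035 value at roughly USD 6 trillion. The 2035 figure simply assumes the same rate holds for two more years. It is an extrapolation, not an independent forecast.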

Within this broader landscape, several sub-markets directly align with Statistical AI’s growth trajectory:

(Projections based on Grand View Research, MarketIntel, and extrapolated CAGR trends)


These growth dynamics reflect a structural transformation: the rise of Statistical AI as a differentiator in both predictive power and interpretability.

1. Why the AI Ecosystem Needs a Statistical Core

1.1 The Plateau of Pure Machine Learning

Over the past decade, deep learning has dominated AI progress. Yet leading research institutions and enterprises are observing diminishing returns in accuracy, with models demanding exponentially more data and compute for marginal improvements.

According to Stanford’s AI Index 2025, model training costs have increased by over 250x between 2018 and 2024, while real-world generalization and interpretability have stagnated. The dependency on massive labelled datasets is becoming a bottleneck.

1.2 Statistical Intelligence: The Missing Engine


Statistical AI provides the structural discipline missing in current AI systems:

- Inference-driven learning instead of blind fitting.

- Uncertainty quantification that captures confidence and risk.

- Causal modeling that explains relationships, not just correlations.

- Probabilistic integration across multi-source, noisy, or missing data.

- Parameter efficiency and robustness to small or drifting datasets.

These principles restore the scientific foundation of AI — enabling systems that are adaptive, explainable, and mathematically consistent.
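Uncertainty quantification, the second principle above, can start as simply as reporting an interval alongside a point estimate. The minimal sketch below uses the percentile bootstrap, one common distribution-free technique chosen here for illustration. The sample values and the `bootstrap_ci` helper are hypothetical.

```python
import random
import statistics


def bootstrap_ci(data, stat=statistics.fmean, n_resamples=2000, alpha=0.05, seed=0):
    """Point estimate plus a percentile-bootstrap (1 - alpha) confidence interval."""
    rng = random.Random(seed)  # seeded so results are reproducible
    n = len(data)
    # Resample with replacement and recompute the statistic each time.
    estimates = sorted(
        stat([data[rng.randrange(n)] for _ in range(n)]) for _ in range(n_resamples)
    )
    lower = estimates[int((alpha / 2) * n_resamples)]
    upper = estimates[int((1 - alpha / 2) * n_resamples) - 1]
    return stat(data), (lower, upper)


# Hypothetical small sample, e.g. daily error rates (%) of a deployed model.
sample = [12.1, 9.8, 11.4, 10.2, 13.0, 9.5, 10.9, 11.7]
estimate, (low, high) = bootstrap_ci(sample)
print(f"mean = {estimate:.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```

The percentile bootstrap makes few distributional assumptions, but it can under-cover with very small samples. The point here is the output format: a range with a stated confidence level, not a single number.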

2. Defining Statistical AI

2.1 Conceptual Definition

Statistical AI is the integration of probabilistic and inferential principles into machine learning architectures, enabling AI systems to reason, quantify uncertainty, and generalize beyond observed data.
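The textbook Beta-Binomial model illustrates this definition in miniature: reasoning, quantifying uncertainty, and generalizing beyond the observed data. A prior belief about an unknown success rate is updated in closed form as evidence arrives. The `BetaBelief` class below is a hypothetical helper, not part of any framework named in this paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief about an unknown probability of success."""

    alpha: float
    beta: float

    def update(self, successes: int, failures: int) -> "BetaBelief":
        # Conjugacy: Beta prior + Binomial likelihood -> Beta posterior.
        return BetaBelief(self.alpha + successes, self.beta + failures)

    @property
    def mean(self) -> float:
        # Also the posterior predictive probability that the next trial succeeds.
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        a, b = self.alpha, self.beta
        return a * b / ((a + b) ** 2 * (a + b + 1))


prior = BetaBelief(1, 1)  # uniform prior: every success rate equally plausible
posterior = prior.update(successes=7, failures=3)
print(f"P(next success) = {posterior.mean:.3f}, sd = {posterior.variance ** 0.5:.3f}")
```

With 7 successes in 10 trials, the predictive probability for the next trial is 8/12 rather than the raw 0.7. The posterior standard deviation shrinks as more trials arrive, so the model reports both an estimate and how much it trusts that estimate.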

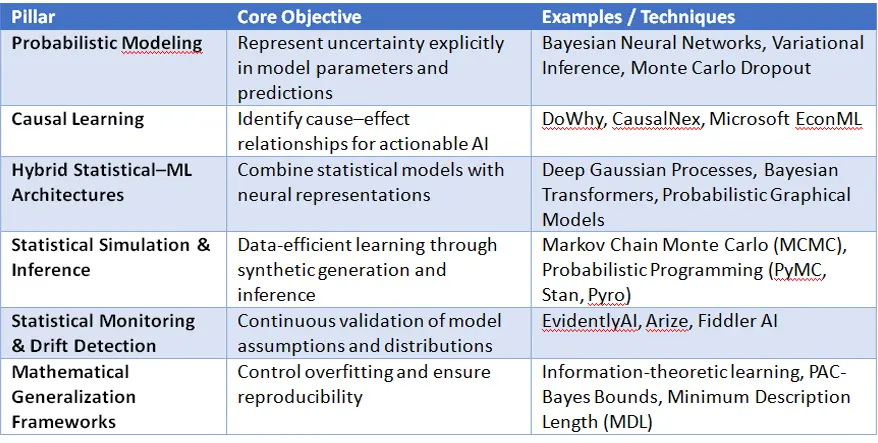

2.2 Technical Pillars

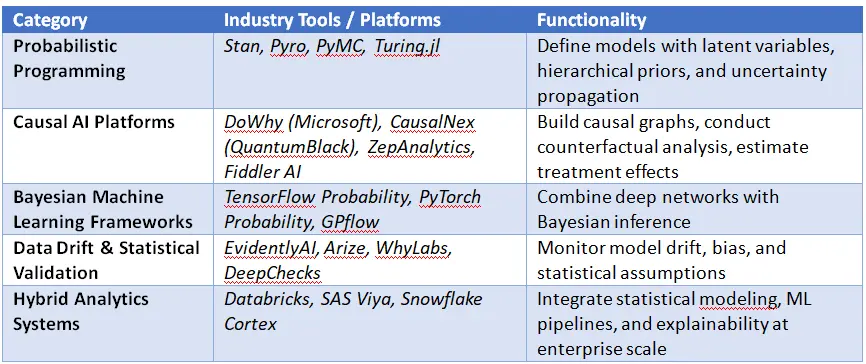

3. Tools and Technologies Powering Statistical AI

The ecosystem for Statistical AI is rapidly maturing, with several tools bridging statistics and AI pipelines:

These platforms signify a decisive shift: statistical inference and uncertainty quantification are becoming first-class citizens in AI engineering.

4. Cross-Industry Applications and Technical Impact

4.1 Financial Services

Problem: Traditional ML credit models overfit and lack causal interpretability.

Solution: Bayesian credit risk modeling with causal attribution and predictive intervals.

Tools: TensorFlow Probability + DoWhy + Fiddler AI for interpretability.

Outcome: Transparent, regulator-ready credit models with quantified confidence.
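The quantified-confidence part of this pattern can be sketched without any of the named tools. The minimal example below assumes a Beta-Binomial model for each segment's probability of default (PD), with a uniform prior. The segment names and counts are hypothetical, and the causal-attribution step is out of scope here.

```python
import random


def pd_posterior(defaults, loans, prior_a=1.0, prior_b=1.0,
                 level=0.90, draws=20_000, seed=42):
    """Posterior mean PD and an equal-tailed credible interval under a Beta-Binomial model."""
    a = prior_a + defaults
    b = prior_b + loans - defaults
    rng = random.Random(seed)
    # Monte Carlo draws from the Beta posterior (the stdlib has no Beta quantile function).
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    tail = (1.0 - level) / 2.0
    lower = samples[int(tail * draws)]
    upper = samples[int((1.0 - tail) * draws) - 1]
    return a / (a + b), (lower, upper)


# Hypothetical portfolio segments: (observed defaults, number of loans).
segments = {"prime": (4, 2000), "near_prime": (9, 600), "new_product": (1, 40)}
results = {name: pd_posterior(d, n) for name, (d, n) in segments.items()}
for name, (mean, (lo, hi)) in results.items():
    print(f"{name:12s} PD = {mean:.2%}  90% interval = ({lo:.2%}, {hi:.2%})")
```

The thin `new_product` segment gets a far wider interval than `prime`. A point-estimate model hides exactly this difference in evidence.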

4.2 Healthcare and Life Sciences

Problem: Clinical data is heterogeneous, uncertain, and incomplete.

Solution: Probabilistic deep learning for diagnosis and treatment response modeling.

Example: DeepMind’s Bayesian medical imaging systems produce uncertainty maps for radiologists, improving diagnostic trust.
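Uncertainty maps of this kind are typically built from several stochastic forward passes per case or per pixel, for example MC dropout or a deep ensemble. The minimal sketch below shows the common entropy-based decomposition for a binary finding and assumes the member probabilities are already available. It is not DeepMind's method, and the inputs are hypothetical.

```python
import math
from statistics import fmean


def binary_entropy(p: float) -> float:
    """Entropy in nats of a Bernoulli(p) prediction; zero at p = 0 or p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def decompose_uncertainty(member_probs):
    """Split predictive uncertainty for one case (or one pixel) into parts.

    member_probs: P(finding present) from each ensemble member / MC-dropout pass.
    Returns (mean probability, total, aleatoric, epistemic), entropies in nats.
    """
    mean_p = fmean(member_probs)
    total = binary_entropy(mean_p)  # entropy of the averaged prediction
    aleatoric = fmean([binary_entropy(p) for p in member_probs])  # expected entropy
    epistemic = total - aleatoric  # mutual information: disagreement between members
    return mean_p, total, aleatoric, epistemic


# Hypothetical cases: members agree the image is ambiguous vs. members disagree.
ambiguous = decompose_uncertainty([0.5, 0.5, 0.5, 0.5])
disputed = decompose_uncertainty([0.02, 0.98, 0.03, 0.97])
for label, (p, tot, ale, epi) in [("ambiguous", ambiguous), ("disputed", disputed)]:
    print(f"{label:9s} p={p:.2f} total={tot:.3f} aleatoric={ale:.3f} epistemic={epi:.3f}")
```

Both cases average to p = 0.5. Only the second shows that the model itself is unsure, which is the kind of case worth flagging for a radiologist.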

4.3 Manufacturing and Industry 4.0

Problem: Predictive maintenance under sensor noise and missing data.

Solution: Gaussian process models and Bayesian reinforcement learning for adaptive control.

Impact: Reduction in downtime through statistically confident maintenance alerts.
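The minimal sketch below shows the Gaussian-process idea for a single sensor, written directly in NumPy rather than with a GP library. It performs exact GP regression with a squared-exponential kernel, so the posterior standard deviation grows where readings are missing. The kernel hyperparameters, the alert threshold, and the readings are hypothetical. A production model would fit the hyperparameters to data.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=5.0, variance=1.0):
    """Squared-exponential covariance between 1-D input arrays a and b."""
    diff = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_predict(x_train, y_train, x_test, noise_var=0.01, **kernel_args):
    """Posterior mean and standard deviation of exact GP regression."""
    y_offset = y_train.mean()  # the GP below has a zero prior mean, so centre the data
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise_var * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, **kernel_args)
    L = np.linalg.cholesky(K)  # K = L L^T, numerically stabler than inverting K
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_offset))
    mean = K_s.T @ alpha + y_offset
    v = np.linalg.solve(L, K_s)
    var = np.diag(rbf_kernel(x_test, x_test, **kernel_args)) - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))  # clip tiny negative round-off


# Hypothetical vibration readings (mm/s) with gaps where the sensor dropped out.
hours = np.array([0, 2, 3, 7, 8, 12, 15, 16], dtype=float)
vibration = np.array([1.0, 1.1, 1.05, 1.3, 1.4, 1.8, 2.2, 2.3])
query = np.array([10.0, 20.0])
mean, sd = gp_predict(hours, vibration, query)

THRESHOLD = 2.5  # hypothetical alarm level
for h, m, s in zip(query, mean, sd):
    lower, upper = m - 2 * s, m + 2 * s
    status = "ALERT" if lower > THRESHOLD else "WATCH" if upper > THRESHOLD else "OK"
    print(f"hour {h:4.1f}: {m:.2f} +/- {2 * s:.2f} -> {status}")
```

This sketch raises an alert only when the lower end of the interval crosses the threshold, which is one way to make alerts statistically confident. The WATCH state covers readings where a threshold breach is plausible but not yet likely.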

4.4 Energy, Climate, and Smart Infrastructure

Problem: High variability in renewable energy output and demand forecasting.

Solution: Hierarchical Bayesian time-series forecasting with hybrid ML models.

Toolkits: PyMC + Prophet + TensorFlow Probability for robust energy forecasting.
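Hierarchical models stay robust for sparse sites because of partial pooling: each site's estimate is shrunk toward the fleet-wide level, more strongly the less data the site has. The minimal sketch below covers the conjugate normal-normal case and assumes the within-site and between-site variances are known. A full PyMC model would infer those variances and would add the time-series structure. Site names and values are hypothetical.

```python
from statistics import fmean


def partial_pool(sites, within_var, between_var):
    """Shrink each site's mean toward the grand mean (normal-normal model, known variances).

    sites: mapping site name -> list of observations (e.g. daily capacity factors).
    within_var: variance of a single observation around its site's true level.
    between_var: variance of true site levels around the fleet-wide level.
    """
    raw = {name: fmean(obs) for name, obs in sites.items()}
    grand_mean = fmean(list(raw.values()))  # simple stand-in for the fleet-level mean
    pooled = {}
    for name, obs in sites.items():
        data_precision = len(obs) / within_var
        prior_precision = 1.0 / between_var
        weight = data_precision / (data_precision + prior_precision)
        pooled[name] = weight * raw[name] + (1.0 - weight) * grand_mean
    return raw, pooled


sites = {
    "site_a": [0.31, 0.29, 0.33, 0.30, 0.32, 0.28, 0.31, 0.30],
    "site_b": [0.22, 0.25, 0.24, 0.23, 0.26, 0.21, 0.24, 0.25],
    "new_site": [0.45, 0.41],  # only two days of history
}
raw, pooled = partial_pool(sites, within_var=0.0016, between_var=0.0016)
for name in sites:
    print(f"{name:9s} raw={raw[name]:.3f} pooled={pooled[name]:.3f}")
```

The new site, with two observations, is pulled noticeably toward the fleet level. The established sites barely move.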

4.5 Retail, Supply Chain, and Logistics

Problem: Data sparsity and behavioral uncertainty in consumer patterns.

Solution: Hierarchical Bayesian demand models with dynamic priors and causal signals (marketing, events, seasonality).

Outcome: Inventory optimization and more stable recommendation systems.
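The minimal sketch below covers the sparse-SKU case. It assumes weekly demand is Poisson with a Gamma prior whose parameters come from the product category, which is one simple reading of a dynamic prior. The posterior predictive is then negative binomial, and the stock level is its quantile at a target service level. All numbers and helper names are hypothetical.

```python
import math


def update_gamma_poisson(shape, rate, weekly_counts):
    """Conjugate update of a Gamma(shape, rate) prior on a Poisson demand rate."""
    return shape + sum(weekly_counts), rate + len(weekly_counts)


def predictive_pmf(k, shape, rate):
    """P(next week's demand = k) under the negative-binomial posterior predictive."""
    log_p = (math.lgamma(k + shape) - math.lgamma(k + 1) - math.lgamma(shape)
             + shape * math.log(rate / (rate + 1.0)) - k * math.log(rate + 1.0))
    return math.exp(log_p)


def stock_for_service_level(shape, rate, service_level=0.95, max_units=10_000):
    """Smallest stock level that covers next week's demand with the target probability."""
    cumulative = 0.0
    for k in range(max_units + 1):
        cumulative += predictive_pmf(k, shape, rate)
        if cumulative >= service_level:
            return k
    raise ValueError("service level not reached; increase max_units")


# Category-level prior: about 4 units/week (mean = shape / rate), worth ~2 weeks of data.
prior_shape, prior_rate = 8.0, 2.0
# A new SKU with only three weeks of sparse sales history.
shape, rate = update_gamma_poisson(prior_shape, prior_rate, [0, 2, 1])
print(f"posterior mean demand: {shape / rate:.2f} units/week")
print(f"stock for 95% service: {stock_for_service_level(shape, rate)} units")
```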

5. Statistical AI in Enterprise Architecture

5.1 Integration Framework

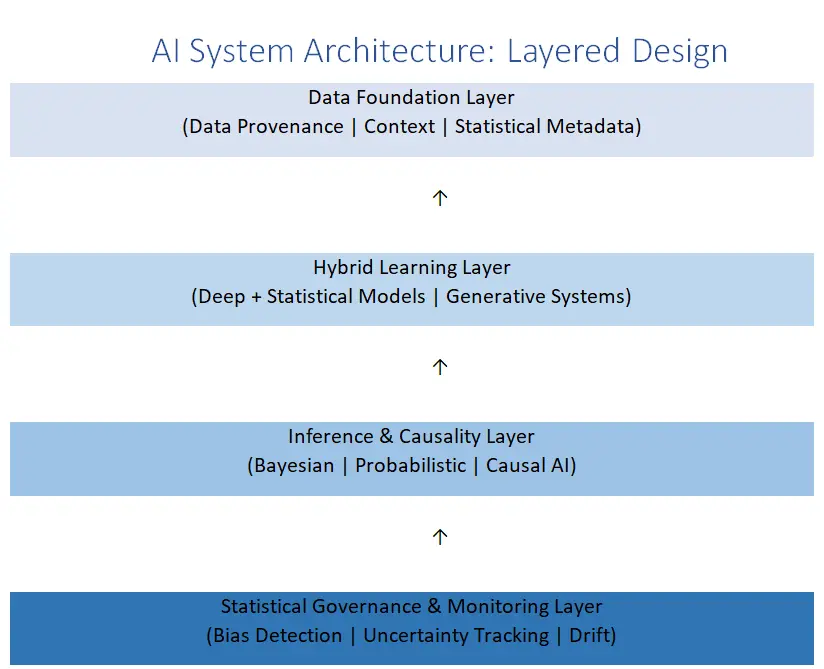

A future-ready AI ecosystem will adopt a Statistical AI stack, combining four layers:

- Data Foundation Layer – Metadata, lineage, and statistical data quality metrics.

- Inference & Causality Layer – Probabilistic programming and causal reasoning frameworks.

- Hybrid Learning Layer – Bayesian deep learning, Gaussian processes, and interpretable neural networks.

- Governance & Assurance Layer – Continuous model validation, drift detection, and statistical calibration.
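A governance layer of this kind commonly automates two checks. The first is calibration: do predicted probabilities match observed frequencies? The second is distribution drift. The minimal sketch below computes expected calibration error (ECE) with equal-width bins and the population stability index (PSI). The bin counts, the epsilon guard, and the inputs are illustrative assumptions. The PSI thresholds in the comment are a widely used rule of thumb, not a standard.

```python
import math


def expected_calibration_error(probs, labels, n_bins=10):
    """Bin-size-weighted mean |average confidence - observed frequency| over equal-width bins."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))  # p = 1.0 goes in the top bin
    ece = 0.0
    for contents in bins:
        if contents:
            confidence = sum(p for p, _ in contents) / len(contents)
            frequency = sum(y for _, y in contents) / len(contents)
            ece += len(contents) / len(probs) * abs(confidence - frequency)
    return ece


def population_stability_index(expected_props, actual_props, eps=1e-6):
    """PSI between two binned distributions given as per-bin proportions.

    Rule of thumb often used in credit risk: < 0.1 stable, 0.1-0.25 moderate
    shift, > 0.25 significant shift.
    """
    return sum(
        (a - e) * math.log((a + eps) / (e + eps))
        for e, a in zip(expected_props, actual_props)
    )


# Hypothetical: a model that says 90% but is right only half the time.
overconfident = expected_calibration_error([0.9] * 10, [1, 0] * 5)
# Hypothetical score-band proportions at development time vs. this month.
psi = population_stability_index([0.25, 0.25, 0.25, 0.25], [0.10, 0.20, 0.30, 0.40])
print(f"ECE = {overconfident:.2f}, PSI = {psi:.3f}")
```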

5.2 Architecture Example

This layered design positions statistical reasoning not as a wrapper but as the analytical core of the AI system.

6. The Future Vision: Statistical AI as the Brain of Intelligent Systems

6.1 Fusion of Generative and Statistical Intelligence

The rise of Generative AI (e.g., GPT, Gemini) highlights a paradox: powerful pattern generation but uncertain reasoning. The next step is Statistical Generative AI — models that combine probabilistic reasoning, uncertainty tracking, and interpretability within generative architectures.

Emerging examples include:

- Bayesian Transformers: integrating uncertainty in large language models.

- Probabilistic Diffusion Models: quantifying sampling confidence in generative tasks.

- Causal Generative Models: learning data generation processes grounded in domain causality.

6.2 Predictive and Prescriptive Convergence

Statistical AI closes the loop between prediction and prescription:

- Predictive analytics quantifies what will happen.

- Statistical AI explains why and with what confidence.

- Prescriptive AI acts on decisions weighted by statistical certainty.
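One way to weight prescriptive decisions by statistical certainty is to score each action on its full posterior predictive distribution rather than on its mean. The minimal sketch below assumes exponential (constant absolute risk aversion) utility, which penalizes wider outcome distributions. The action names, outcome distributions, and risk-aversion values are hypothetical.

```python
import math
import random


def certainty_equivalent(outcomes, risk_aversion):
    """Certainty equivalent of sampled outcomes under u(x) = -exp(-a * x).

    With risk_aversion a = 0 this reduces to the plain expected value.
    """
    if risk_aversion == 0:
        return sum(outcomes) / len(outcomes)
    a = risk_aversion
    expected_utility = sum(-math.exp(-a * x) for x in outcomes) / len(outcomes)
    return -math.log(-expected_utility) / a  # invert u to get a sure-thing value


def choose_action(outcome_samples, risk_aversion):
    """Return the action whose certainty equivalent is highest."""
    return max(outcome_samples,
               key=lambda act: certainty_equivalent(outcome_samples[act], risk_aversion))


# Hypothetical posterior predictive samples of net benefit (in thousands) per action.
rng = random.Random(7)
samples = {
    "proven_policy": [rng.gauss(100, 5) for _ in range(20_000)],  # well understood
    "new_policy": [rng.gauss(105, 40) for _ in range(20_000)],    # higher mean, uncertain
}
for a in (0.0, 0.01):
    print(f"risk aversion {a}: choose {choose_action(samples, a)}")
```

For normally distributed outcomes, this certainty equivalent equals mean − a·variance/2, which makes the trade-off explicit. The higher-mean but uncertain action wins under a risk-neutral criterion. Once spread is penalized, the better-understood action wins.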

6.3 Towards Cognitive Statistical Systems

In the coming decade, AI systems will learn, reason, and decide like scientists — not just classifiers.

They will:

- Continuously learn priors from experience,

- Quantify and communicate uncertainty,

- Adapt decisions through statistical feedback, and

- Integrate symbolic reasoning with data-driven inference.

This evolution defines the AI 3.0 Era — where statistical intelligence becomes synonymous with artificial intelligence.

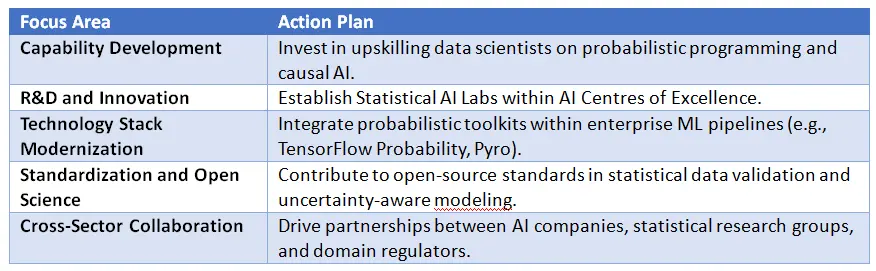

7. Strategic Roadmap for Enterprises and Governments

The decade from 2025 to 2035 will witness a transformation of how AI is built, governed, and deployed — powered by the principles of Statistical AI.

Key Predictions:

Multimodal AI Convergence: Enterprises will move toward systems that can integrate models across diverse modalities (text, vision, signal, tabular).

Uncertainty-Aware Decision Systems: Decision engines will explicitly consume model uncertainty for cost–risk optimization.

Statistical AI-as-a-Service (SAIaaS): Cloud platforms will offer probabilistic inference engines as managed services.

Democratized Causal Modeling: Causal and counterfactual reasoning will become standard in business analytics tools.

Hybrid Governance Models: Enterprises will adopt statistical validation layers to ensure AI reliability and calibration at scale.

8. Conclusion

The evolution of AI is entering a decisive phase — one that demands a return to mathematical intelligence.

Statistical AI is the unifying paradigm that bridges human reasoning with machine learning, enabling systems that can infer, quantify, and adapt. It is not merely a complement to AI; it is the very heart of its ecosystem.

From smart factories and autonomous systems to personalized healthcare and financial analytics, every domain that depends on prediction and decision will rely on Statistical AI as its cognitive core.

The future of AI is not just artificial — it is statistical.

About the Author:

Global Head – Statistical AI COE

Tata Consultancy Services (TCS)

Mr. Abhaya Kant Srivastava is a seasoned risk analytics leader in the banking and financial sector with over 19 years of experience.

Mr. Abhaya Kant Srivastava is currently Global Head – Statistical AI at TCS, where he leads the strategic initiative to acquire new projects in statistical, predictive, and traditional AI, advises clients on deploying AI and ML solutions, develops assets and tools, and supports the engineering team.

Mr. Abhaya Kant Srivastava is spearheading the Statistical and Predictive AI practice within the newly established Central AI Centre of Excellence (CoE), driving strategic AI initiatives across business groups and industry verticals. He collaborates with cross-functional AI leadership to deliver cutting-edge solutions, advisory services, and capability development aimed at accelerating the growth and impact of TCS’s AI practice.

Prior to joining Tata Consultancy Services (TCS), Mr. Abhaya Kant Srivastava headed a large team in the India Risk Analytics and Data Services Practice at Northern Trust Corporation.

Before Northern Trust Corporation, Mr. Abhaya Kant Srivastava led risk and analytics work at KPMG Global Services, Genpact, EXL, and startups such as Essex Lake Group and Cognilytics Consulting.

Mr. Abhaya Kant Srivastava is the founder of the “Risk Analytics Offshore Practice” for Northern Trust and Cognilytics.

Mr. Abhaya Kant Srivastava is an expert in building analytics offshore development centres (ODCs).

Mr. Abhaya Kant Srivastava holds a B.Sc. (Honours) in Statistics (Gold Medallist) from the Institute of Science, Banaras Hindu University, and an M.Sc. in Statistics from the Indian Institute of Technology, Kanpur. He is currently pursuing an executive Ph.D. in Statistics/Machine Learning at the Indian Institute of Management, Lucknow.

Mr. Abhaya Kant Srivastava also holds a certification in “Artificial Intelligence for Senior Leaders” from the Indian Institute of Management, Bangalore.

Mr. Abhaya Kant Srivastava has been accorded the following honours and awards:

https://www.linkedin.com/in/ab

Mr. Abhaya Kant Srivastava holds the following licences and certifications:

https://www.linkedin.com/in/ab

Mr. Abhaya Kant Srivastava has led the following projects:

https://www.linkedin.com/in/ab

Mr. Abhaya Kant Srivastava can be contacted at:

Also read Mr. Abhaya Kant Srivastava’s earlier article: