Data Centre Cooling Techniques & Scope of CFD Modeling

Table of Contents

1. Introduction to Data Center

1.1. Working Principle of Data Center

1.2. Server room of Data Center

2. Types of Data Centre Cooling

2.1. Challenges and Considerations

2.2. Advanced Cooling Technologies

2.3. Chilled Water Cooling of Data Center

2.4. Alternate Cold and Hot Aisle Containment

2.5. Liquid Cooling Technologies

2.6. Immersion Cooling with Dielectric liquid

2.7. Which cooling method is more effective for the data center?

3. Design of Data Centre Cooling

4. Scope of CFD Modeling for Data Centre

5. Case Study 1: Data Center with Two Racks

6. Case Study 2: Temperature Distribution in Data Centre

Introduction to Data Center

• A data centre is, loosely speaking, like the CPU of a PC or laptop, but on a vastly larger scale: huge volumes of data are stored in thousands of servers or storage units. Google's data centres, for example, are spread over large sites, with fans placed outside the plant.

• They operate 24x7 and consume a large amount of electricity.

• Cooling of the data centre is essential; otherwise, the whole data centre would overheat and shut down.

• The cooling system consumes a large portion of the total power.

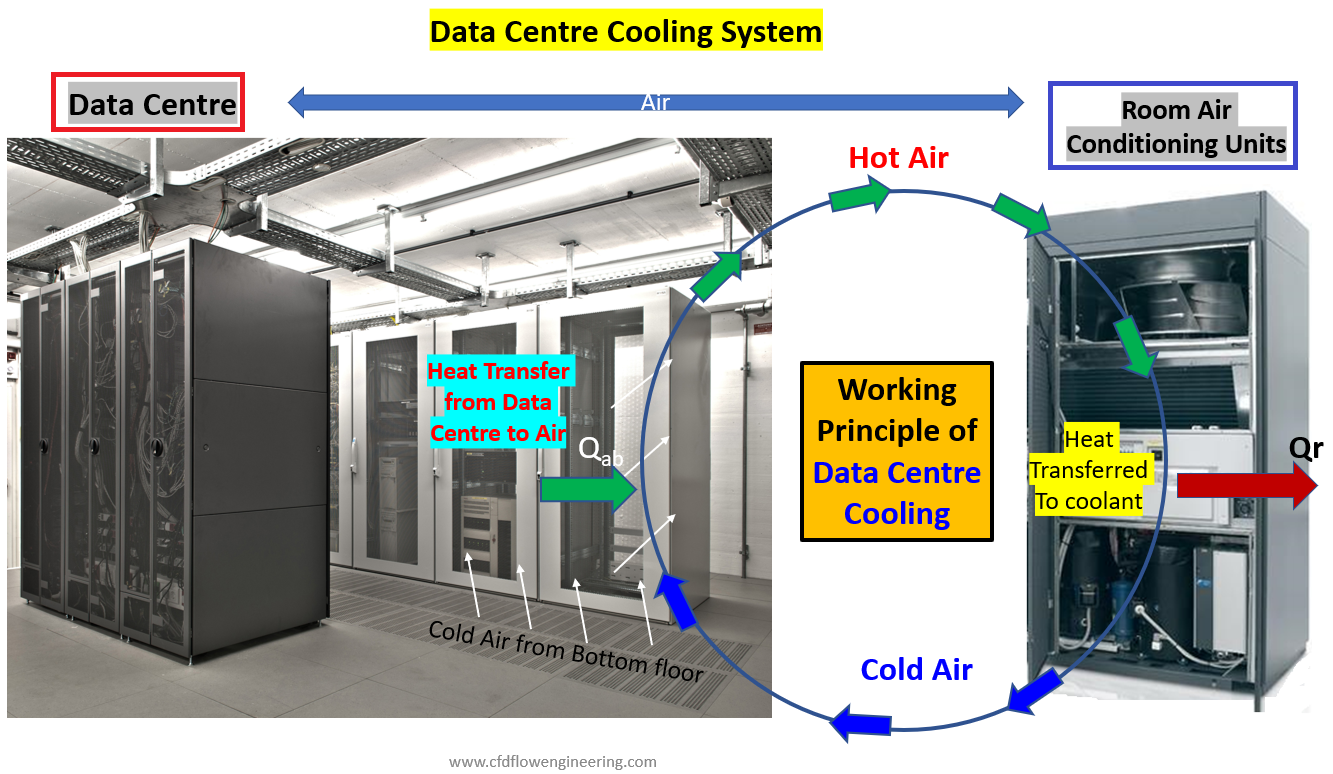

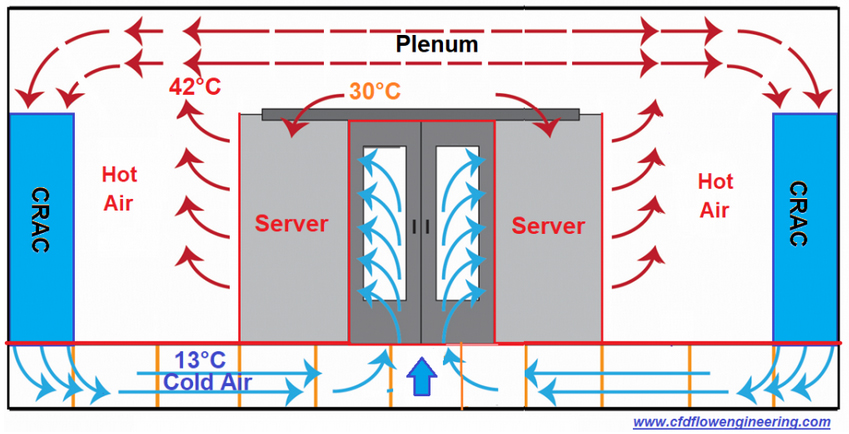

Working Principle of Data Center

• Data center cooling is carried out by air-conditioning units in the server room. Cold air is supplied so that it flows through the heated server racks.

• The heated air is drawn back into the AC unit.

• Inside the AC unit, the coolant absorbs heat from the hot air through a heat exchanger, which lowers the air temperature.

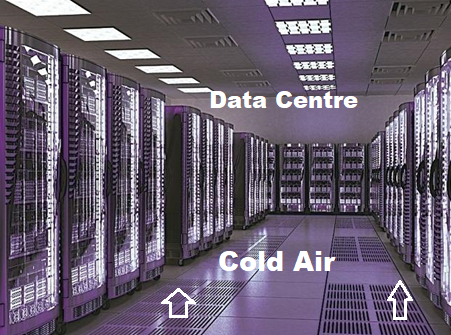

• The cold air is forced from the bottom cold passage into the middle chamber between the server racks. The air then recirculates through the server chamber.
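The airflow described above follows a simple sensible-heat energy balance, Q = m_dot × cp × ΔT: the air must carry away the servers' heat while warming by ΔT. The sketch below is not from the original article; it estimates the air flow needed for a given heat load, and the constant air properties and the 10 kW / 10 K example are illustrative assumptions.

```python
# Sensible-heat energy balance for the cooling air: Q = m_dot * cp * dT
CP_AIR = 1005.0   # J/(kg*K), specific heat of air near room temperature (assumed constant)
RHO_AIR = 1.2     # kg/m^3, air density near 20 degC (assumed constant)


def required_air_flow(heat_load_w: float, delta_t_k: float) -> tuple[float, float]:
    """Return (mass flow in kg/s, volume flow in m^3/s) needed to carry
    heat_load_w away while the air warms by delta_t_k across the racks."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    m_dot = heat_load_w / (CP_AIR * delta_t_k)
    return m_dot, m_dot / RHO_AIR


# Example: a hypothetical 10 kW rack with a 10 K air temperature rise
m_dot, v_dot = required_air_flow(10_000.0, 10.0)
print(f"{m_dot:.3f} kg/s, {v_dot:.3f} m^3/s")
```

For the assumed 10 kW rack this gives roughly 1 kg/s (about 0.83 m³/s) of air; halving the allowed temperature rise doubles the required flow.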

In this article, we cover the following topics:

- Introduction to Data Centre Cooling

- Components of Data Centre Cooling

- Room Air Conditioning Units (RAC) for Data Centres

- Scope of CFD Modelling

- How to Create a 3D Model of a Data Centre

- CFD Inputs for a Data Centre

- Case Studies with CFD Results

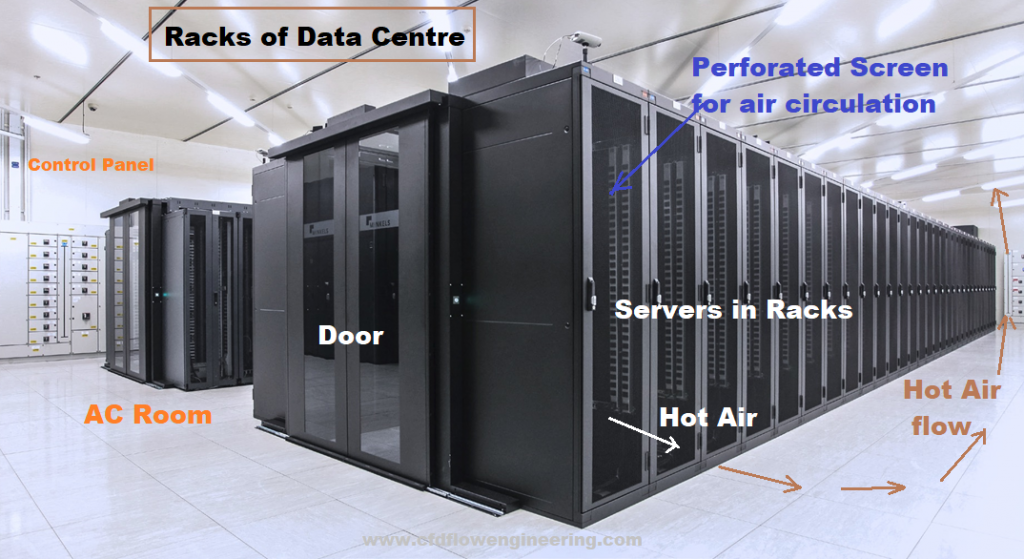

Server room of Data Center


• Data center cooling is exactly what it sounds like: controlling the temperature inside data centers by removing the heat generated by the equipment.

• Failing to manage the heat and airflow within a data center can have disastrous effects on a business.

• Not only is energy efficiency seriously reduced, with many resources spent on keeping the temperature down, but the risk of servers overheating also rises rapidly.

• The cooling system in a modern data center controls several parameters to achieve effective convective cooling with maximum efficiency.

• The following parameters are key:

- Temperatures (T)

- Heat Removal rate in watts (Q1)

- Power consumption by AC units in watts (E)

- Cooling Efficiency (Q1/E)

- Cooling fluid flow characteristics

- Design of Server racks
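The cooling efficiency in the parameter list is simply the ratio of heat removed (Q1) to the power drawn by the AC units (E). A minimal sketch, using hypothetical meter readings:

```python
def cooling_efficiency(heat_removed_w: float, ac_power_w: float) -> float:
    """Cooling efficiency Q1 / E (dimensionless): watts of heat removed per watt of AC power."""
    if ac_power_w <= 0:
        raise ValueError("AC power consumption must be positive")
    return heat_removed_w / ac_power_w


# Hypothetical readings: 60 kW of heat removed while the AC units draw 20 kW
print(cooling_efficiency(60_000.0, 20_000.0))  # 3.0
```

A higher ratio means less electrical power is spent per watt of server heat removed.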

• All components of the data center cooling system are interconnected to maintain a high heat-removal rate.

• Irrespective of the type of data center, continuous cooling is necessary for smooth functioning without shutting down operations

• Perforated tiles are kept between two rows of racks for better cooling efficiency

Importance of Data Centre Cooling

• The entire AC room is kept at around 20°C. If the cooling system fails, the rack temperature may rise above 50°C.

• Some operators run their data centers warmer, at around 27°C (about 80°F).

• A poorly designed cooling system may deliver the wrong type of cooling to your servers. This would lead to serious overheating, a risk that no business should accept willingly, with consequences such as:

- Unplanned shutdown of servers

- Overheating of electronic items due to hot spots under high load

- Melting of insulation and disconnection of data cables

- Burning and damage to rooms

- Fire spreading to the control room

Overview of data center cooling

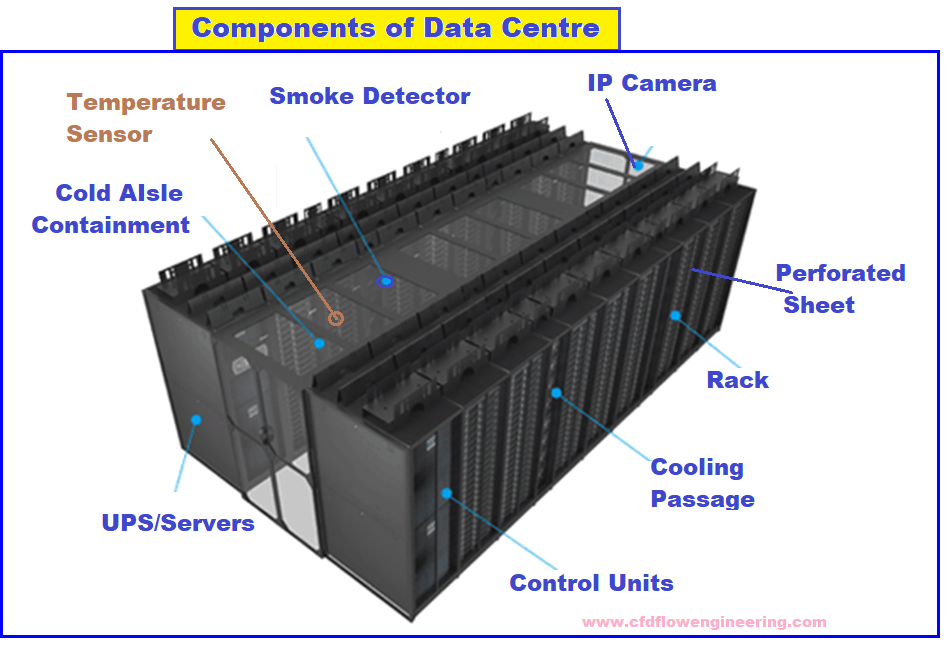

Major Components of Data Centre Cooling

The data center consists of the following major parts:

- UPS/ Data server racks

- Cold Aisle containment

- Monitoring sensors

- Smoke Detector


- Temperature sensors

- IP camera

- Control Panel

Types of Data Centre Cooling

• Data center cooling systems are critical for maintaining optimal operating conditions and preventing equipment overheating in data centers.

• Here are some key points about data center cooling systems:

- Air-Cooled Systems: Use air to remove heat from the data center. This can be done through raised floors, ceiling vents, or dedicated cooling units.

- Liquid-Cooled Systems: Utilize water or other liquids to remove heat. This can be more efficient than air cooling but requires specialized infrastructure.

Components of Cooling Systems:

• Air Handlers: Distribute cooled air throughout the data center.

• Chillers: Refrigeration units that cool water or liquid coolant in liquid-cooled systems.

• Cooling Towers: Expel heat from liquid coolant by evaporating water.

• CRAC/CRAH Units: Computer Room Air Conditioner (CRAC) or Computer Room Air Handler (CRAH) units are commonly used in air-cooled systems to control temperature and humidity.

Challenges and Considerations

• Energy Efficiency: Data centers consume a significant amount of energy for cooling. Efficient cooling designs and technologies (like free cooling using outside air) can reduce energy consumption.

• Scalability: Cooling systems must be scalable to accommodate growing data center infrastructure without compromising efficiency.

• Redundancy: Redundant cooling systems are essential to ensure continuous operation in case of equipment failure or maintenance.
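One common way to provide redundancy is N+1 sizing: install enough units to carry the full load, plus one standby unit. The helper below is a hypothetical sketch of that arithmetic; the 250 kW load and 60 kW unit capacity are assumed values, and N+1 is only one of several redundancy schemes.

```python
import math


def units_required(heat_load_kw: float, unit_capacity_kw: float, spares: int = 1) -> int:
    """Number of cooling units for a given load with `spares` standby units (N + spares)."""
    if unit_capacity_kw <= 0:
        raise ValueError("unit capacity must be positive")
    n = math.ceil(heat_load_kw / unit_capacity_kw)  # N units needed to carry the full load
    return n + spares


print(units_required(250.0, 60.0))  # ceil(4.17) = 5, plus 1 spare -> 6
```

With one unit out for failure or maintenance, the remaining units can still carry the full load.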

Advanced Cooling Technologies

• Liquid Immersion Cooling: Submerges servers in a non-conductive liquid to directly dissipate heat, offering high cooling efficiency.

• Hot Aisle/Cold Aisle Containment: Organizes server racks to optimize airflow and reduce hot spots, improving cooling efficiency.

• Variable-Speed Fans and Pumps: Adjust fan and pump speeds based on cooling demand, improving energy efficiency.
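Variable-speed fans save energy because, by the ideal fan affinity laws, airflow scales roughly with fan speed while fan power scales with roughly the cube of speed. The sketch below applies that idealized cube law; real fans and duct systems deviate from it, so the numbers are indicative only.

```python
def fan_power_ratio(speed_fraction: float) -> float:
    """Ideal fan affinity law: power scales with the cube of fan speed
    (airflow scales linearly with speed). Real fans deviate somewhat."""
    if speed_fraction < 0:
        raise ValueError("speed fraction must be non-negative")
    return speed_fraction ** 3


# Running a fan at 80% speed when the cooling demand allows it
print(f"{fan_power_ratio(0.8):.3f}")  # 0.512, i.e. roughly half the power
```

This cubic relationship is why slowing fans modestly during low demand gives a disproportionately large saving.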

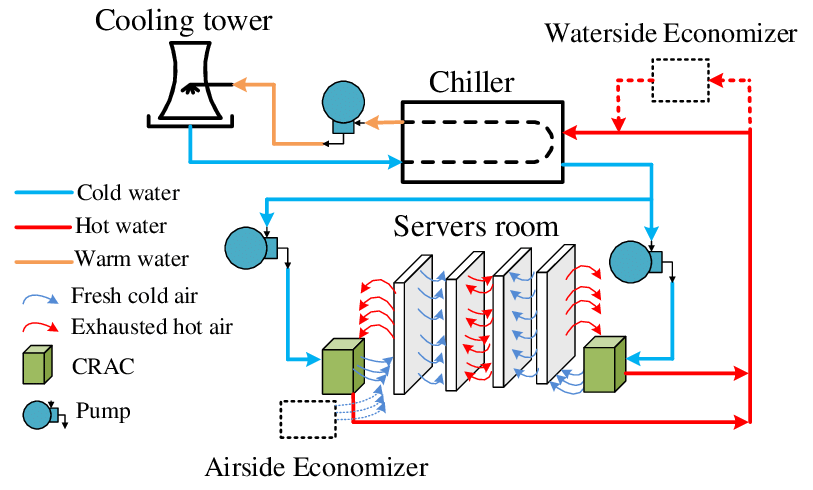

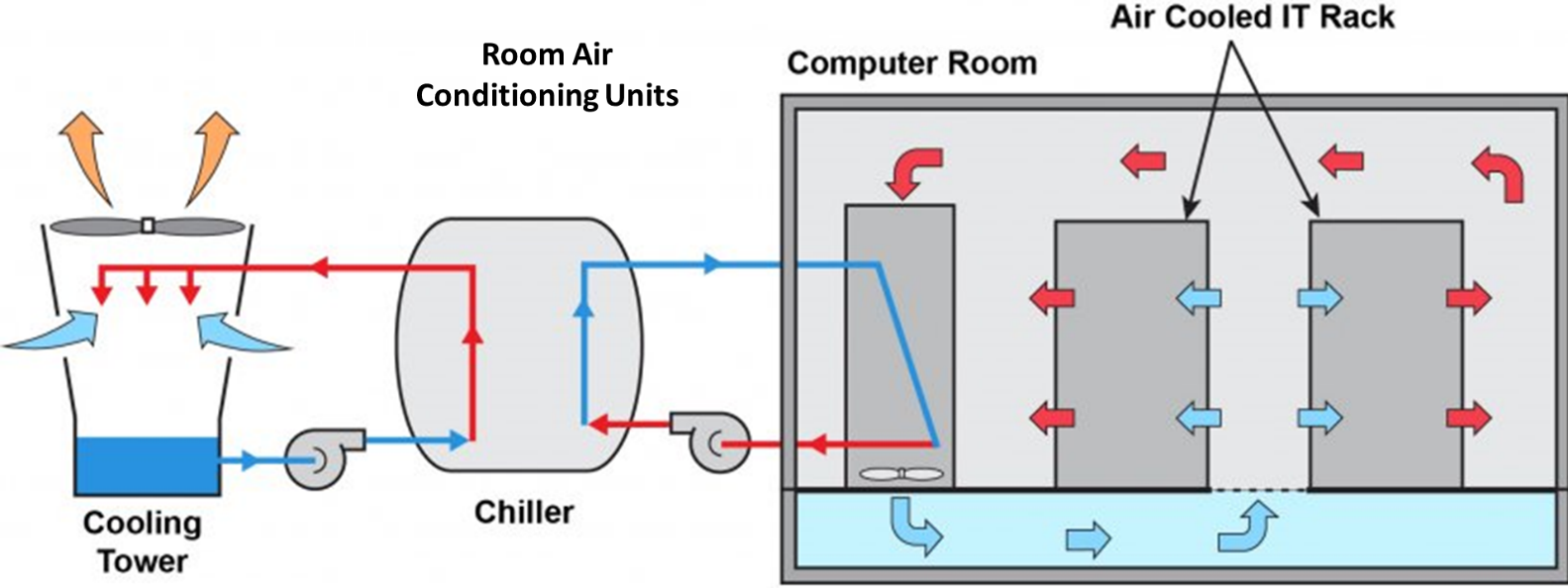

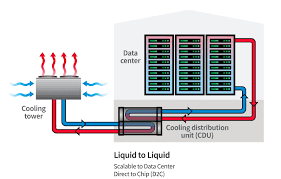

Chilled Water Cooling of Data Center

• Chilled water is commonly used for medium and large-sized data centers.

• There is an exchange of heat energy between hot and cold water in the chiller unit.

• In the cooling tower, the warm water is further cooled, mainly by evaporation.

• The room air-conditioning (RAC) unit cools the heated air coming from the servers.

• Air is cooled by blowing over a cooling coil, which is connected to a chiller.

• The heat absorbed from the air is transferred to the water coolant.

Chilled Water Cooling of Data Center – Water Flow Circuits

• A schematic of the data center cooling system is shown below.

Data Center cooling system with Chiller

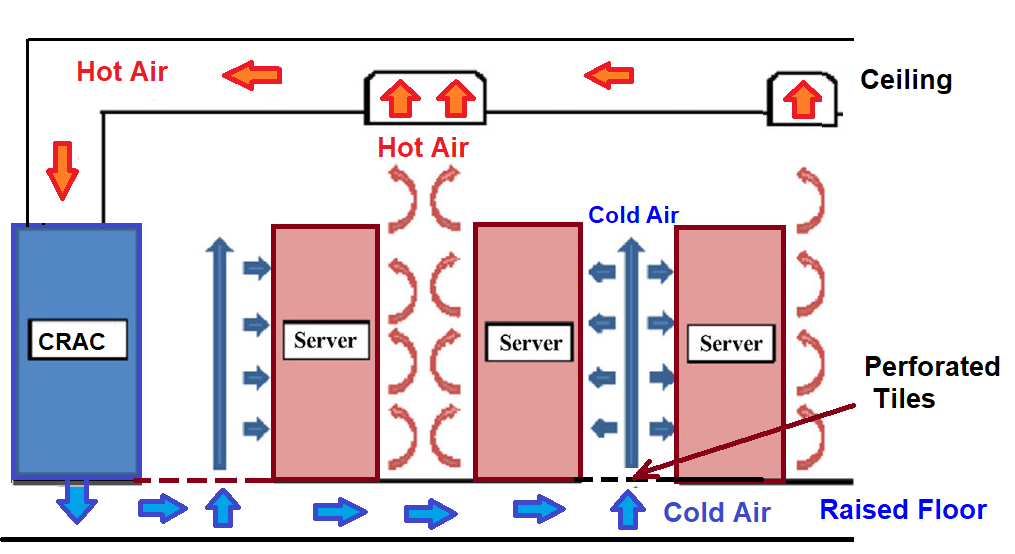

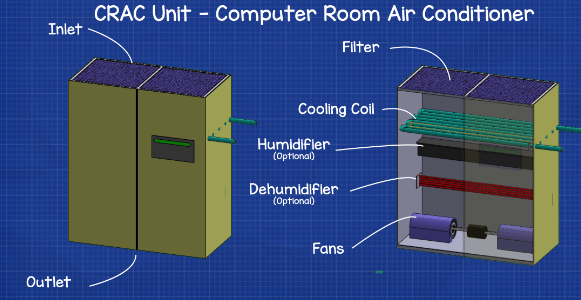
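The chilled-water loop can be sized with the same kind of energy balance used for air, Q = m_dot × cp × ΔT, where ΔT is the water's temperature rise between supply and return. The 200 kW load and the 7/12 °C supply/return temperatures below are assumed example values, not figures from the article.

```python
CP_WATER = 4186.0  # J/(kg*K), specific heat of water (assumed constant)


def chilled_water_flow(heat_load_w: float, supply_c: float, return_c: float) -> float:
    """Chilled-water mass flow (kg/s) needed to absorb heat_load_w when the water
    warms from supply_c to return_c in the cooling coils."""
    delta_t = return_c - supply_c
    if delta_t <= 0:
        raise ValueError("return temperature must exceed supply temperature")
    return heat_load_w / (CP_WATER * delta_t)


# Hypothetical 200 kW load, 7 degC supply, 12 degC return
m_dot = chilled_water_flow(200_000.0, 7.0, 12.0)
print(f"{m_dot:.2f} kg/s (about {m_dot:.1f} L/s)")
```

Since 1 kg of water is close to 1 L, the mass flow translates directly into an approximate pump flow rate.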

Computer Room Air Conditioner (CRAC)

• This is one of the most popular and low-cost cooling techniques for small and medium data centers.

• CRAC units are quite similar to conventional room air conditioners.

• They use a compressor-driven refrigeration cycle: a fan draws hot room air across a refrigerant-filled cooling coil, and the cooled air is blown back into the room.

• They are relatively inefficient in terms of energy usage; however, the equipment itself is comparatively inexpensive.

Computer Room Air Conditioner (CRAC) for Data centre

• The flow pattern in a data centre room cooled by Computer Room Air Conditioner (CRAC) units is shown below.

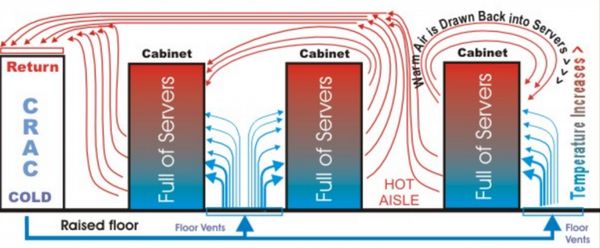

Alternate Cold and Hot Aisle Containment

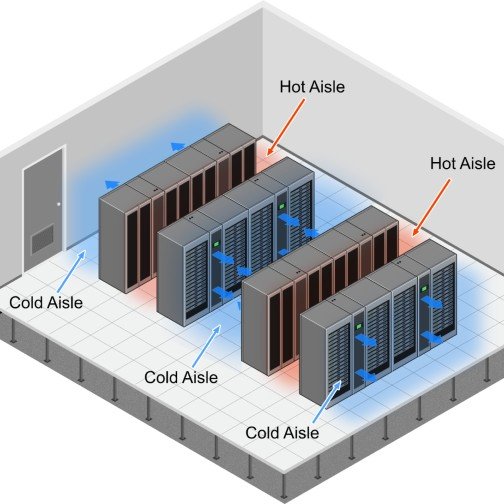

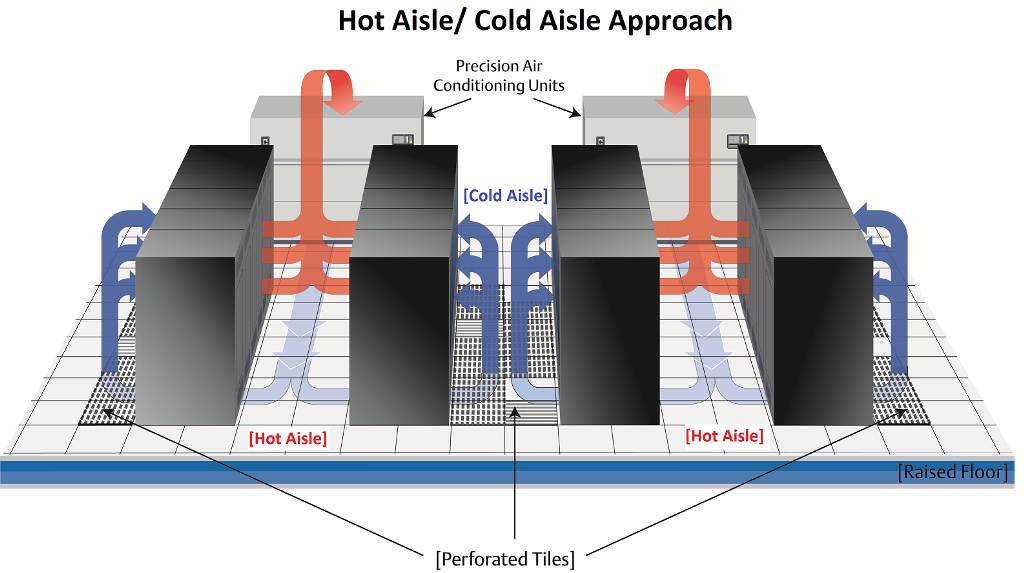

• Alternating cold and hot aisle containment is widely used for rack deployment. In a cold aisle, the rack fronts face each other and form a chamber for the cold inlet air; the heated air exhausted from the rear of the racks flows into the hot aisle containment.

• Hot aisles direct the hot air into the CRAC intakes to be cooled, and the cooled air is then vented into the cold aisles. Empty rack spaces are covered with blanking panels to avoid overheating and wasted cold air.

• The data centre equipment is placed on a raised floor, at a certain height above the ground, to allow smooth circulation of air.

Data Center Cooling Trends: Room, Row and Rack Cooling

• The bottom of the cold aisle consists of perforated tiles for uniform entry of cold air.

Alternate Cold and Hot Aisle Containment- airflow management in data centers
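Blanking panels and containment matter because any hot exhaust that leaks back into the cold aisle mixes with the supply air and raises the rack inlet temperature. Assuming simple adiabatic mixing with equal specific heats, the inlet temperature is a weighted average of the supply and exhaust temperatures; the recirculation fraction and temperatures below are hypothetical.

```python
def rack_inlet_temperature(supply_c: float, exhaust_c: float, recirc_fraction: float) -> float:
    """Rack inlet temperature when a fraction of the hot-aisle exhaust leaks back
    and mixes with the cold supply air (simple adiabatic mixing, equal cp)."""
    if not 0.0 <= recirc_fraction <= 1.0:
        raise ValueError("recirculation fraction must be between 0 and 1")
    return (1.0 - recirc_fraction) * supply_c + recirc_fraction * exhaust_c


# 18 degC supply, 35 degC exhaust: fully contained vs. 30% recirculation
print(f"{rack_inlet_temperature(18.0, 35.0, 0.0):.1f}")  # 18.0
print(f"{rack_inlet_temperature(18.0, 35.0, 0.3):.1f}")  # 23.1
```

Even moderate recirculation erodes the margin between the supply temperature and the equipment limit.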

Liquid Cooling Technologies

• Liquid cooling removes heat faster and at a higher rate than air cooling. However, the system must be leak-proof so that liquid cannot spill over the data center equipment.

• Two common liquid cooling methods are full immersion cooling and direct-to-chip cooling.
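One reason liquid cooling removes heat faster is that a liquid stores far more heat per unit volume than air. The sketch compares the volumetric heat capacity (ρ × cp) of water and air using approximate room-temperature properties; water stands in here for a generic liquid coolant, and dielectric fluids have different (generally lower) values.

```python
# Approximate properties near room temperature (assumed values)
AIR = {"rho": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"rho": 998.0, "cp": 4186.0}  # kg/m^3, J/(kg*K)


def volumetric_heat_capacity(fluid: dict) -> float:
    """Heat carried per cubic metre per kelvin of temperature rise, J/(m^3*K)."""
    return fluid["rho"] * fluid["cp"]


ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
print(f"Water carries about {ratio:.0f} times more heat per unit volume than air")
```

With these assumed properties the ratio is roughly 3,500, which is why a modest liquid flow can replace a very large volume of air.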

Liquid Cooling Solutions of data centre

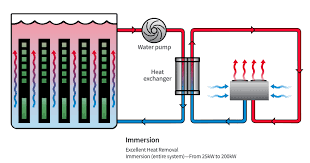

Immersion Cooling with Dielectric liquid

• The hardware of the Data Center is immersed in a tub of non-conductive, non-flammable dielectric liquid coolant

• Both the fluid and the hardware should be contained within a leak-proof case

• The dielectric fluid absorbs heat much faster than air. In two-phase immersion systems, the fluid boils into vapor, which condenses and falls back into the liquid bath, with the heat ultimately rejected through a cooling tower.

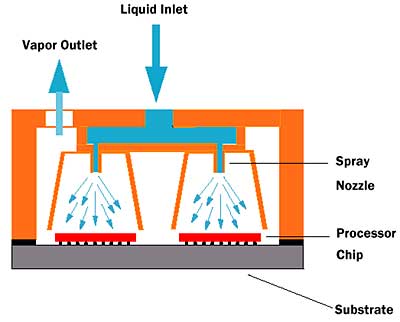

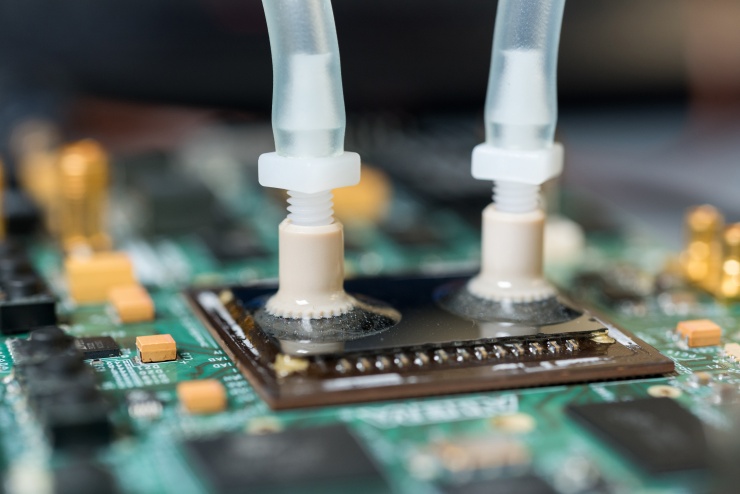

Direct to Chip Cooling

• Liquid coolant directly flows into a cold plate that sits atop a motherboard’s chips to extract heat

• The extracted heat is then transferred to a chilled-water loop, transported back to the facility’s cooling plant, and expelled into the outside atmosphere.

• Both methods provide far more efficient cooling solutions for power-hungry data center deployments.

• The coolant pipe is placed on top of the chips for faster cooling. This reduces space and maintenance costs. This method is effective for small data centers.

• Some high-end processors in high-performance computing (HPC) machines are liquid-cooled to remove heat at a high rate.
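For direct-to-chip cooling, a first estimate of chip temperature treats the path from chip to coolant as a single thermal resistance R_th, so that T_chip ≈ T_coolant + Q × R_th. This lumped model and the 300 W / 0.1 K/W example values are simplifying assumptions for illustration; a real cold plate also sees the coolant warm along its path.

```python
def chip_temperature(coolant_inlet_c: float, chip_power_w: float, r_th_k_per_w: float) -> float:
    """Steady-state chip temperature using a single lumped thermal resistance
    between the chip and the cold-plate coolant: T = T_in + Q * R_th."""
    return coolant_inlet_c + chip_power_w * r_th_k_per_w


# Hypothetical 300 W processor, 30 degC coolant, 0.1 K/W cold-plate resistance
print(f"{chip_temperature(30.0, 300.0, 0.1):.1f} degC")  # 60.0 degC
```

Lowering R_th (better cold-plate design) or the coolant temperature both lower the chip temperature for the same power.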

Liquid Cooling Solutions of data centre

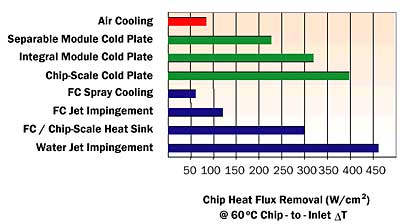

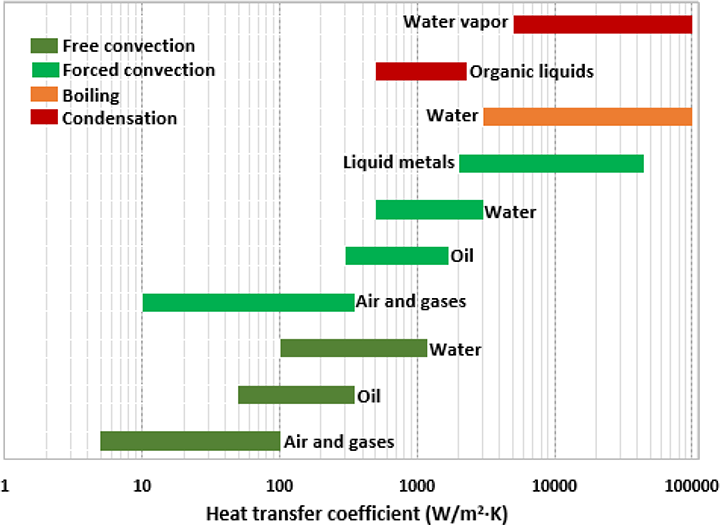

Which cooling method is more effective for the data center?

• Various cooling methods have been developed to cool data centers or chips

• Among all techniques, jet impingement provides one of the highest heat-removal rates from chips.

• Liquid cooling methods are more effective than air cooling, but their maintenance is not easy.

• Two-phase cooling (e.g. boiling water and vapor) has much higher heat transfer coefficients than air or oil.
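The differences between these methods follow from Newton's law of cooling, Q = h × A × ΔT: for the same surface and temperature difference, heat removal scales directly with the heat transfer coefficient h. The h values below are assumed order-of-magnitude figures chosen for illustration, not measured data.

```python
# Order-of-magnitude convective heat transfer coefficients, W/(m^2*K).
# Illustrative assumed values only; real values depend strongly on geometry and flow.
H_TYPICAL = {
    "forced air": 100.0,
    "forced water (single-phase)": 5_000.0,
    "boiling (two-phase)": 20_000.0,
}


def heat_removed(h: float, area_m2: float, delta_t_k: float) -> float:
    """Newton's law of cooling: Q = h * A * (T_surface - T_fluid), in watts."""
    return h * area_m2 * delta_t_k


# Same hypothetical 4 cm x 4 cm chip surface held 40 K above the coolant
area = 0.04 * 0.04
for name, h in H_TYPICAL.items():
    print(f"{name:28s} {heat_removed(h, area, 40.0):8.1f} W")
```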

Design of Data Centre Cooling

Precision Air Conditioning (PAC)

• Precision Air Conditioning (PAC) systems are specifically designed for cooling data center and server room environments, unlike systems designed for general buildings (homes, commercial offices, and halls).

• Precision Air Conditioning systems offer superior design and reliability and have a high ratio of sensible to total cooling capacity (the sensible heat ratio, SHR).

• Precision Air Conditioning (PAC) system controls the environment, providing constant temperature and humidity conditions for sophisticated, expensive, and sensitive electronic equipment throughout the year
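The sensible heat ratio is the sensible cooling capacity divided by the total (sensible plus latent) capacity. The capacities in the sketch are hypothetical, chosen only to contrast a PAC unit with a comfort room AC.

```python
def sensible_heat_ratio(sensible_kw: float, latent_kw: float) -> float:
    """Sensible heat ratio: sensible capacity divided by total (sensible + latent) capacity."""
    total = sensible_kw + latent_kw
    if total <= 0:
        raise ValueError("total capacity must be positive")
    return sensible_kw / total


# Hypothetical units: a PAC removing mostly sensible heat vs. a comfort room AC
print(f"PAC SHR:     {sensible_heat_ratio(45.0, 5.0):.2f}")  # 0.90
print(f"Room AC SHR: {sensible_heat_ratio(3.5, 1.5):.2f}")   # 0.70
```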

Design of Precision Air Conditioners (PAC)

Precise Temperature control

• The inlet temperature of the cold air is kept between 10 and 15 °C.

• The maximum temperature should not exceed 50°C.

• If the air flow rate is limited, the supply air temperature must be lowered to keep the equipment below this maximum temperature.
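The link between air flow and supply temperature follows from the energy balance Q = m_dot × cp × ΔT: the air's temperature rise is Q / (m_dot × cp), so with less air the supply must be colder to keep the outlet below the limit. The sketch uses the 50 °C maximum quoted above; the 20 kW rack and the flow rates are assumed values.

```python
CP_AIR = 1005.0  # J/(kg*K), specific heat of air (assumed constant)


def max_supply_temperature(heat_load_w: float, air_flow_kg_s: float, max_outlet_c: float) -> float:
    """Highest supply-air temperature that keeps the rack outlet at or below
    max_outlet_c for a given heat load and air mass flow (Q = m_dot * cp * dT)."""
    if air_flow_kg_s <= 0:
        raise ValueError("air flow must be positive")
    temperature_rise = heat_load_w / (air_flow_kg_s * CP_AIR)
    return max_outlet_c - temperature_rise


# Hypothetical 20 kW rack with a 50 degC outlet limit: full vs. halved air flow
for flow in (1.0, 0.5):
    print(f"{flow} kg/s -> supply at or below {max_supply_temperature(20_000.0, flow, 50.0):.1f} degC")
```

Under these assumptions, halving the flow from 1.0 to 0.5 kg/s lowers the allowable supply temperature from about 30 °C to about 10 °C.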

Precise humidity control

• Electronic devices require a steady level of humidity for proper functioning

• Both high and low humidity levels can affect equipment performance over the long run.

Continuous Cooling

• Room air conditioners are generally designed for summer use (3 Star, 1800 hrs).

• PACs are designed to run 365 days a year.

Manage High Levels of Sensible heat

• Room ACs are designed to handle latent heat (heat associated with moisture, such as that given off by people).

• PAC units are designed to remove sensible heat (heat without added moisture) generated by machines.

Better Air Distribution

• They handle higher air flow rates, moving a larger volume of air at higher speeds than standard ACs.

• The AC carries fewer airborne particles into the room.

• Precision air conditioners handle higher cooling loads and load densities per unit.

• Cooling output can be increased or decreased automatically for efficient cooling.

Computer Room Air Conditioning (CRAC) Units

• A CRAC unit is similar to traditional air conditioning

• It is designed to maintain the temperature, air distribution, and humidity in a data center’s computer rooms. The CRAC unit can use a direct expansion refrigeration cycle

• It is also used to pressurize the space below the raised floor.

• The parts of CRAC units are shown below

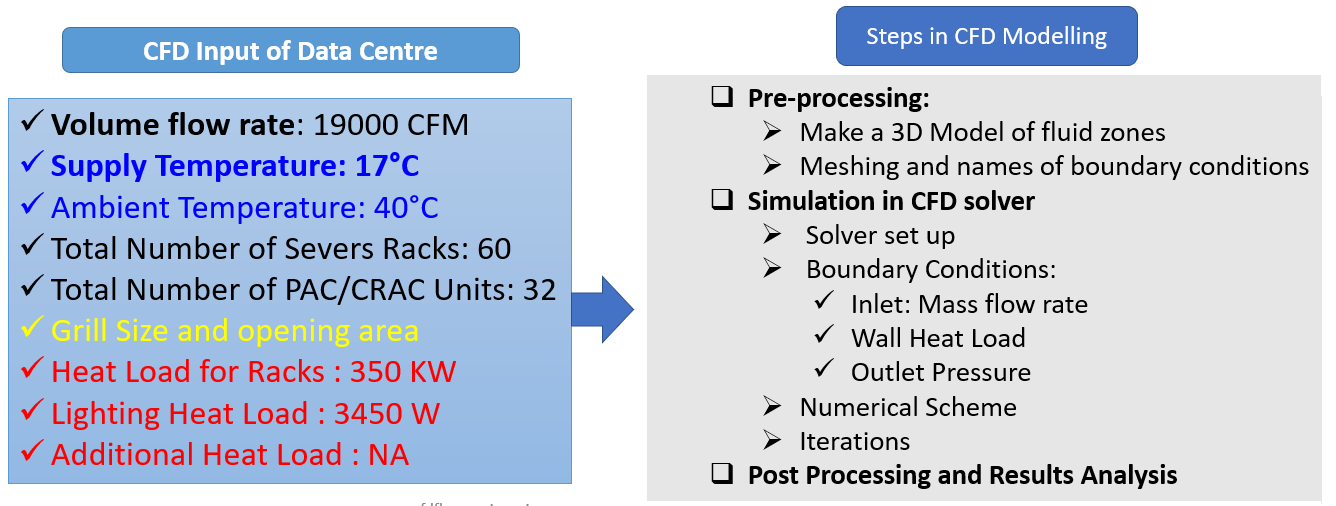

Scope of CFD Modeling for Data Centre

• Number of Racks required in a room with RAC units

• Assess the performance of PAC/CRAC Units

• Effect of Supply Temperature

• Influence of Surrounding Ambient Conditions during extreme weather

• Finding the hottest (critical) server, which is most prone to fire

• Design optimization of Data Centre

CFD Inputs and Modeling Steps

• CFD users create the geometry and mesh according to the dimensions of the server room and racks.

• Once the CFD model is created, the major CFD inputs are the air mass flow rate and temperature, and the heat load of the servers.

• The basic steps of CFD modeling are the same as for any other CFD problem.

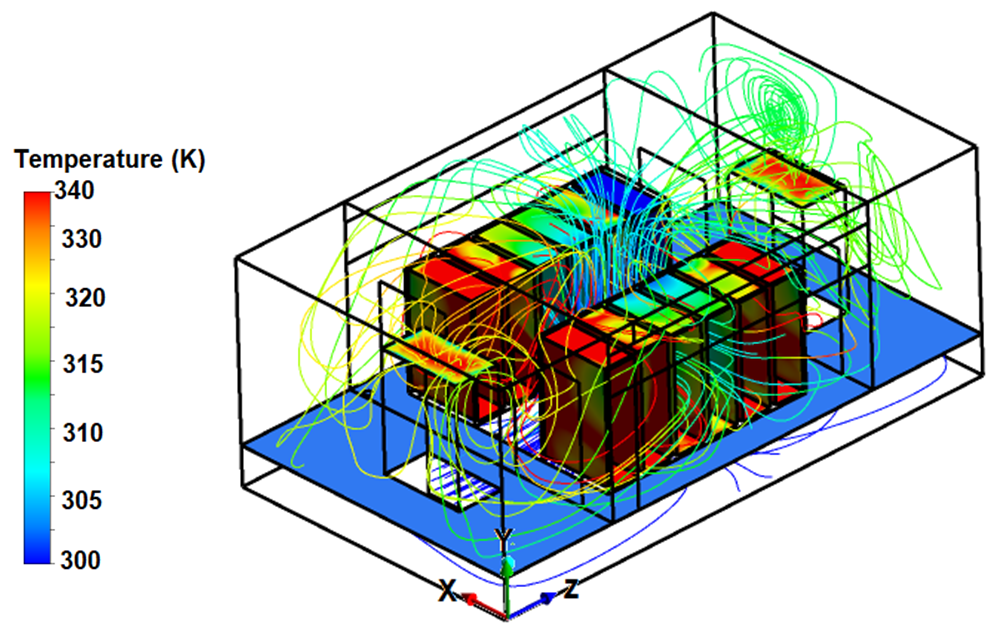

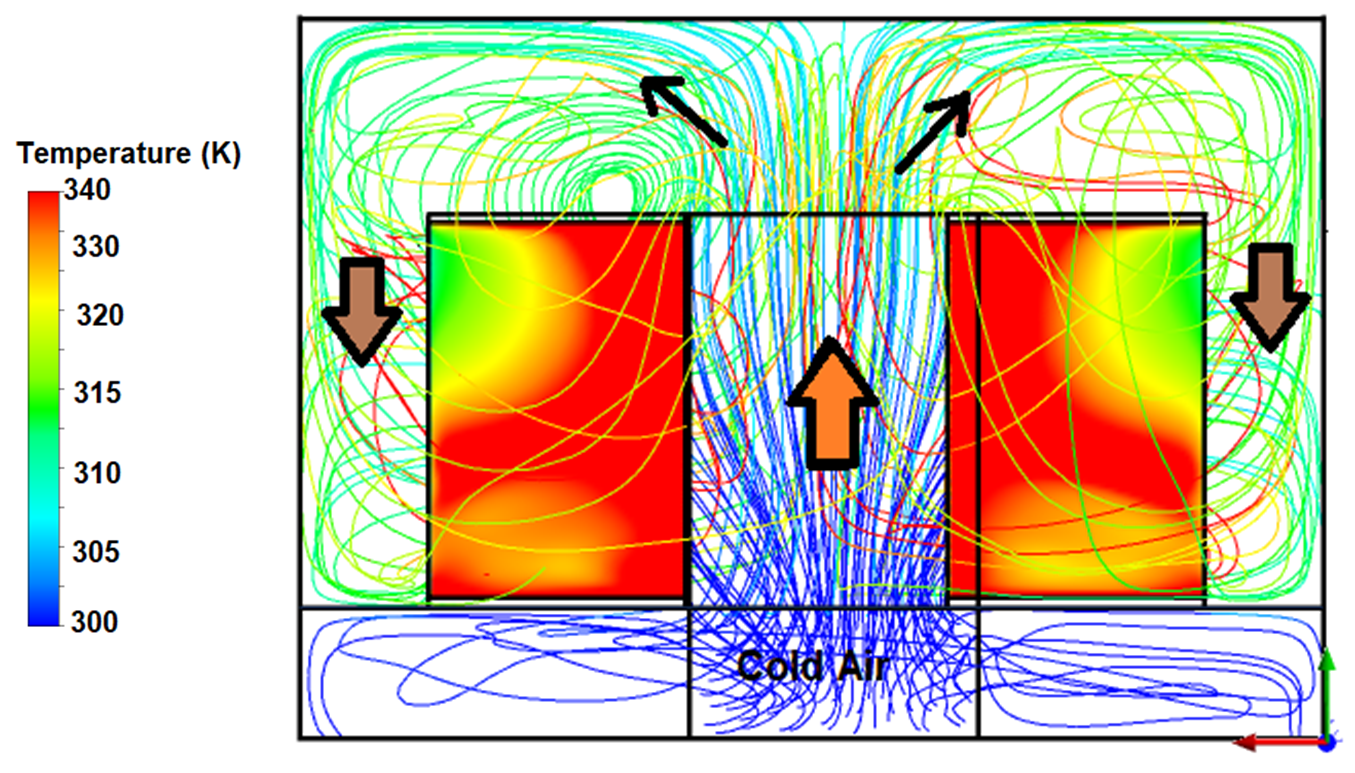

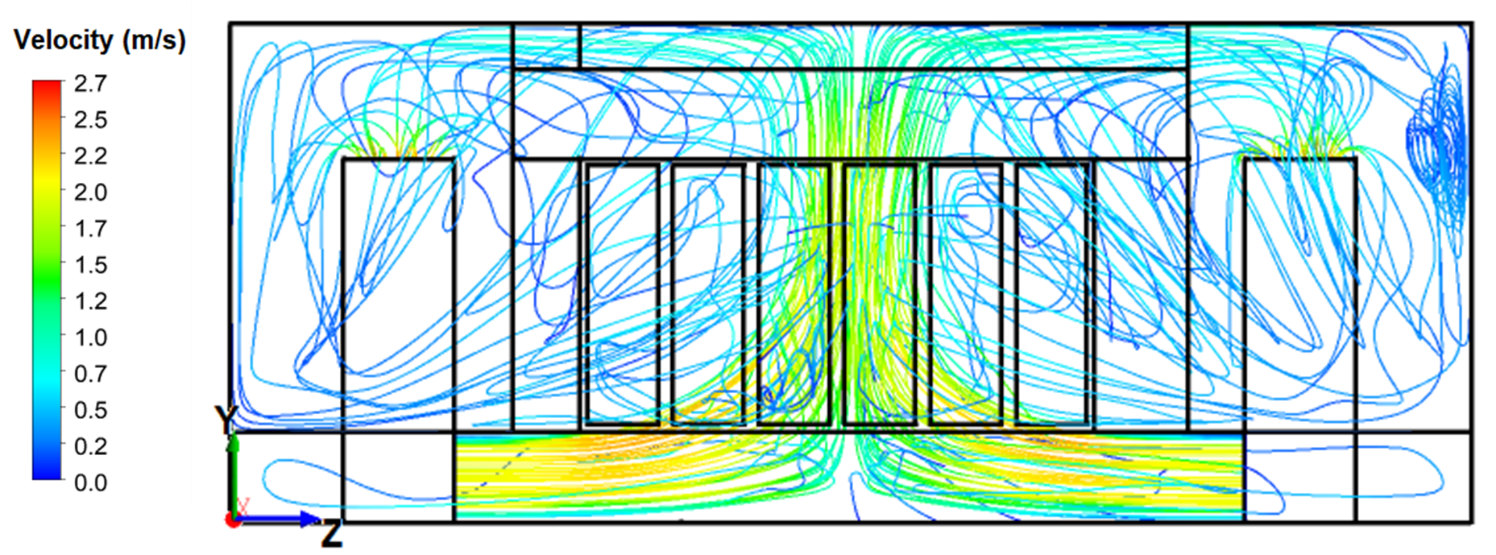
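Before solving, the CFD inputs listed above often need converting into boundary-condition form, for example a mass flow rate into an inlet velocity and a server heat load into a volumetric heat source. The helpers below are a hypothetical sketch: the tile area, the server dimensions, and the uniform velocity over the gross tile area (ignoring the perforation open fraction) are illustrative assumptions, and solvers such as ANSYS Fluent can also accept a mass flow inlet directly.

```python
RHO_AIR = 1.2  # kg/m^3, assumed air density at the inlet


def inlet_velocity(mass_flow_kg_s: float, inlet_area_m2: float) -> float:
    """Uniform inlet velocity (m/s) for a velocity-inlet boundary: v = m_dot / (rho * A)."""
    return mass_flow_kg_s / (RHO_AIR * inlet_area_m2)


def volumetric_heat_source(heat_load_w: float, width_m: float, depth_m: float, height_m: float) -> float:
    """Server heat load spread uniformly over a box-shaped server volume, W/m^3."""
    return heat_load_w / (width_m * depth_m * height_m)


# Hypothetical inputs: 2 kg/s through 1.2 m^2 of perforated tiles, and one
# 500 W server modelled as a 0.45 m x 0.7 m x 0.045 m block
print(f"inlet velocity: {inlet_velocity(2.0, 1.2):.2f} m/s")
print(f"heat source:    {volumetric_heat_source(500.0, 0.45, 0.7, 0.045):.0f} W/m^3")
```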

Case Study 1: Data Center with Two Racks

• A simple data centre with 12 servers was simulated using a CFD solver (ANSYS FLUENT).

• Cold air enters through the centre, between the racks.

• Temperature contours across the server room are shown below. Temperatures are very high around the servers.

• The heat removal rate can be increased by increasing the air velocity.

• Air velocity is observed to be highest at the centre.

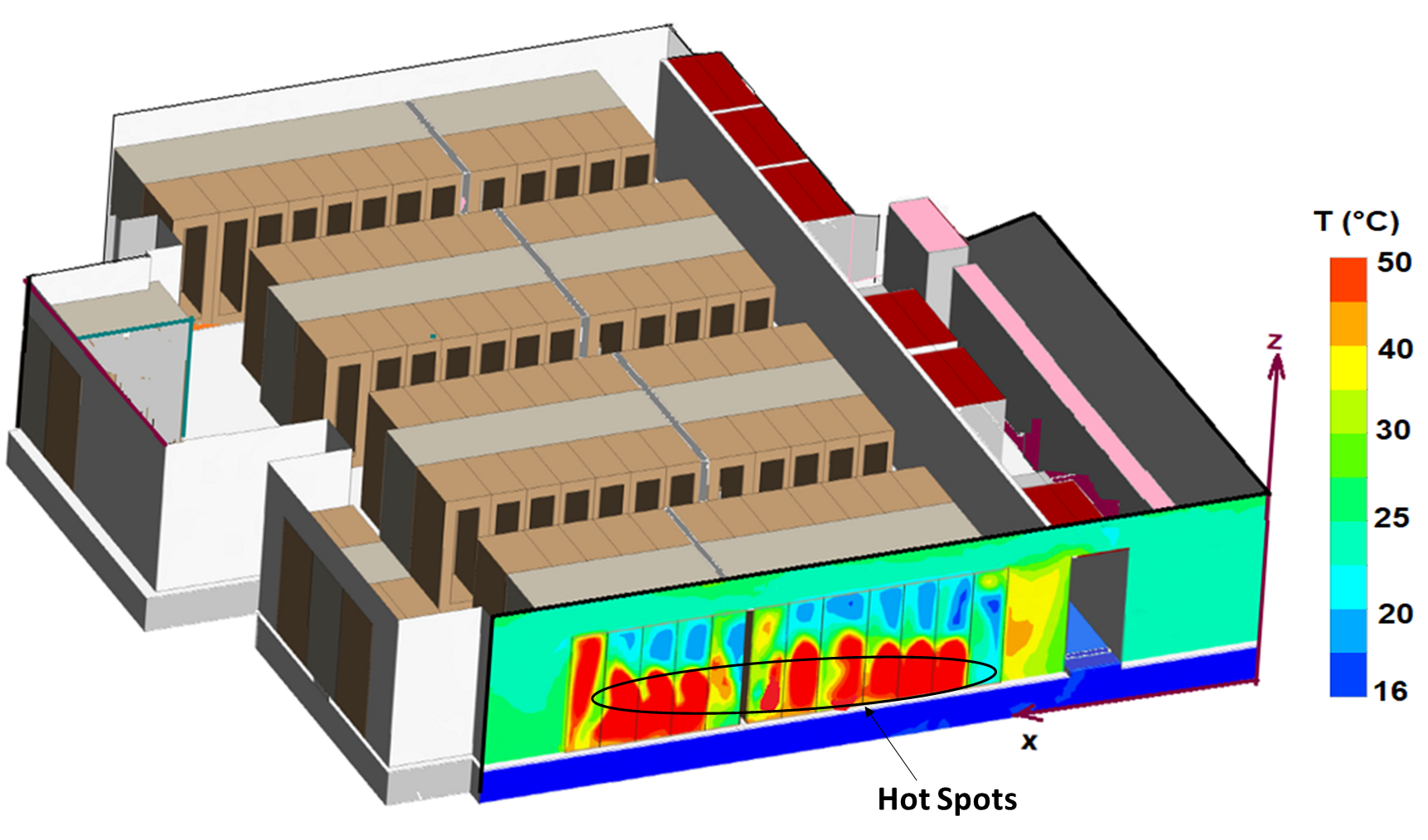

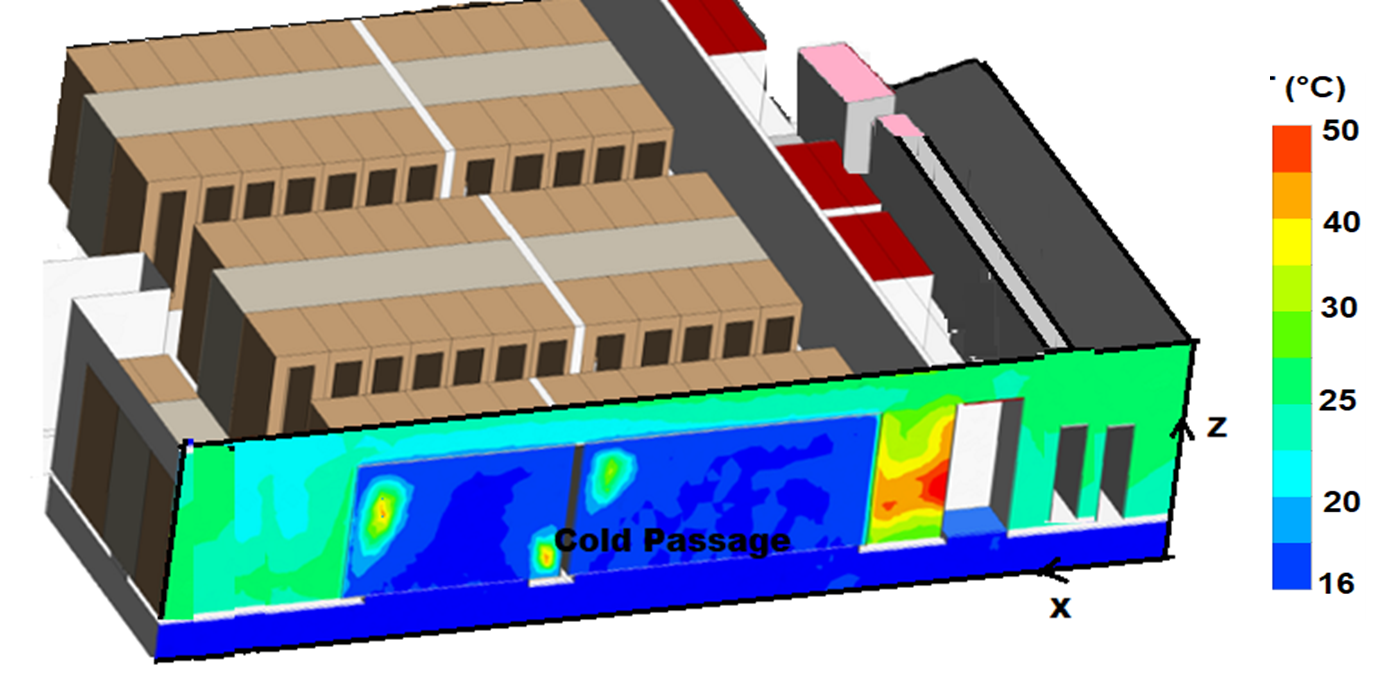

Case Study 2: Temperature Distribution in Data Centre

• Temperature contours in the heated server racks, through which the cold air warms up as it absorbs heat.

• Maintaining the right temperature in a data center is crucial for the reliable operation of IT equipment. Here are some key points regarding temperature management in data centers:

Recommended Temperature Range:

• The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommends a temperature range of 18-27°C (64-81°F) for data centers.

• ASHRAE also suggests a humidity range of 40-60% to prevent static electricity buildup and minimize the risk of equipment failure.
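The recommended ranges above lend themselves to a simple automated check on sensor readings. The sketch below flags readings outside 18 to 27 °C and 40 to 60 % relative humidity; the `SensorReading` structure and the sensor names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    sensor_id: str            # hypothetical sensor naming
    temperature_c: float
    relative_humidity_pct: float


TEMP_RANGE_C = (18.0, 27.0)   # recommended temperature range quoted above
RH_RANGE_PCT = (40.0, 60.0)   # humidity range quoted above


def out_of_range(readings: list[SensorReading]) -> list[str]:
    """Return a message for every reading outside the recommended envelope."""
    alerts = []
    for r in readings:
        if not TEMP_RANGE_C[0] <= r.temperature_c <= TEMP_RANGE_C[1]:
            alerts.append(f"{r.sensor_id}: temperature {r.temperature_c} degC")
        if not RH_RANGE_PCT[0] <= r.relative_humidity_pct <= RH_RANGE_PCT[1]:
            alerts.append(f"{r.sensor_id}: humidity {r.relative_humidity_pct} %")
    return alerts


readings = [
    SensorReading("rack-01-inlet", 22.5, 45.0),
    SensorReading("rack-07-inlet", 29.0, 38.0),
]
for alert in out_of_range(readings):
    print(alert)
```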

1. Hot Aisle/Cold Aisle Containment:

• Organizing server racks into hot aisles (where hot air is expelled from servers) and cold aisles (where cool air is supplied) can improve cooling efficiency.

• Using containment systems like aisle-end doors and roof panels can further enhance airflow management and temperature control.

2. Cooling Strategies:

• Air-Cooled Systems: Use air conditioning units, computer room air conditioners (CRAC), or computer room air handlers (CRAH) to cool the air in the data center.

• Liquid-Cooled Systems: Employ chilled water or liquid coolant systems to remove heat from servers and other equipment.

3. Monitoring and Management:

• Continuous temperature monitoring using sensors throughout the data center helps maintain optimal conditions and alerts staff to potential cooling issues.

• Automated systems can adjust cooling settings based on real-time data and cooling demands, improving efficiency and reliability.
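Automated adjustment can be as simple as a proportional controller that raises fan speed as the rack inlet temperature climbs above a setpoint. The setpoint, gain, and speed limits below are assumed values, and production systems typically use more elaborate (e.g. PID) control.

```python
def fan_speed_command(inlet_temp_c: float, setpoint_c: float = 24.0, gain_per_k: float = 0.1,
                      min_speed: float = 0.3, max_speed: float = 1.0) -> float:
    """Proportional control: raise fan speed (fraction of full speed) as the rack
    inlet temperature rises above the setpoint, clamped to the fan's speed range.
    Setpoint, gain, and limits are illustrative assumptions."""
    error = inlet_temp_c - setpoint_c
    speed = min_speed + gain_per_k * max(error, 0.0)  # no extra speed below the setpoint
    return min(speed, max_speed)


for t in (22.0, 26.0, 40.0):
    print(t, round(fan_speed_command(t), 2))
```

Below the setpoint the fans idle at minimum speed; far above it they saturate at full speed.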

4. Energy Efficiency Considerations:

• Efficient cooling strategies, such as free cooling using outside air when ambient conditions allow, can reduce energy consumption and operating costs.

• Using energy-efficient cooling equipment, like variable-speed fans and pumps, can also contribute to overall energy savings.

5. Emergency Cooling Plans:

• Data centers should have contingency plans for cooling failures, such as backup cooling systems or emergency protocols to shut down non-essential equipment to prevent overheating.
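A rough estimate of how quickly a room overheats after a total cooling failure shows why contingency plans matter. The sketch counts only the heat capacity of the room air and ignores equipment mass and losses through walls, so it gives a pessimistic (short) time; the 100 kW load and room size are assumptions, and the 20 to 50 °C limits are taken from the temperatures quoted earlier in the article.

```python
CP_AIR = 1005.0   # J/(kg*K), specific heat of air (assumed constant)
RHO_AIR = 1.2     # kg/m^3, air density (assumed constant)


def minutes_to_limit(heat_load_w: float, room_volume_m3: float, start_c: float, limit_c: float) -> float:
    """Rough time for the room air to heat from start_c to limit_c after total
    cooling loss: t = m * cp * dT / Q. Only the air's heat capacity is counted
    (equipment, walls, and heat losses are ignored), so this is a pessimistic,
    lumped estimate."""
    air_mass_kg = RHO_AIR * room_volume_m3
    energy_j = air_mass_kg * CP_AIR * (limit_c - start_c)
    return energy_j / heat_load_w / 60.0


# Hypothetical 100 kW room of 10 m x 10 m x 3 m, from 20 degC to 50 degC
print(f"{minutes_to_limit(100_000.0, 300.0, 20.0, 50.0):.1f} min")
```

Under these assumptions the air alone would reach 50 °C in under two minutes, which is why backup cooling or fast shutdown procedures are needed.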

6. Environmental Impact:

• Optimizing cooling systems for energy efficiency not only reduces operational costs but also minimizes the environmental impact of data centers by lowering carbon emissions.

CFD Results of Data Center: Temperature Distribution

• Temperature contours in the cold passage, through which cold air flows between two opposite racks.


Summary

• Data centre cooling is necessary to avoid shutdowns caused by overheating of data servers or UPS units.

• Data centre cooling runs continuously with cold air supplied by computer room air conditioning (CRAC) units.

• The data centre can also be connected to a separate water cooling circuit for improved cooling efficiency.

• CFD modeling helps identify hot spots in the data center. In the case studies, the CFD results show high velocity in the center of the racks and high temperature at the end of the racks.

• Overall, maintaining a stable and appropriate temperature in data centers is essential for ensuring equipment reliability, minimizing downtime, and optimizing energy usage.

About the Author:

Dr. Sharad Pachpute (PhD.)

CFD Engineer,

Zeeco, Inc.

Dr. Sharad Pachpute works as a CFD engineer at Zeeco Inc.

Dr. Sharad Pachpute's work includes CFD combustion modeling of fuel equipment systems, emissions controls, low NOx burners, and AIG systems.

Before joining Zeeco Inc., Dr. Sharad Pachpute worked at Babcock Power, AGFIS, NTPC, and Altran Technologies India Pvt. Ltd (Capgemini Engineering Services).

Dr. Sharad Pachpute received a B.E. in Mechanical Engineering from Pune University with distinction in 2008.

Dr. Sharad Pachpute completed his M.Tech. and Ph.D. at IIT Delhi.

Dr. Sharad Pachpute's current research areas are: Combustion and Pollution Modeling, Development of Low NOx Burners, Heat Transfer, Turbulent Flow Modeling, Multi-phase Flows, and CFD Modeling of Complex Problems (moving zones and turbomachinery).

Dr. Sharad Pachpute can be Contacted at :