HOW DATA CENTERS IN INDIA ARE PREPPING TO SUPPORT SERVERLESS COMPUTING FOR AI & ML TECHNOLOGIES

In recent years, serverless architectures have revolutionized the way applications are built and deployed, freeing developers from the burden of managing infrastructure. This paradigm shift has opened up exciting opportunities for the development of artificial intelligence (AI) and machine learning (ML) applications. In this blog post, we will explore how serverless architectures are paving the way for a new era of AI and ML applications, discussing the key benefits they offer. We will also touch upon the major changes brought about by AI in various industries.

Serverless Computing: A Brief Overview

At its core, serverless computing is an execution model in which cloud providers dynamically manage the allocation of resources to run applications. Developers can focus solely on writing code and deploying it to a serverless platform, which takes care of scaling, patching, and other operational tasks. This approach is particularly advantageous for AI and ML applications, allowing developers to concentrate on building and refining their models rather than on the complexities of the underlying infrastructure.

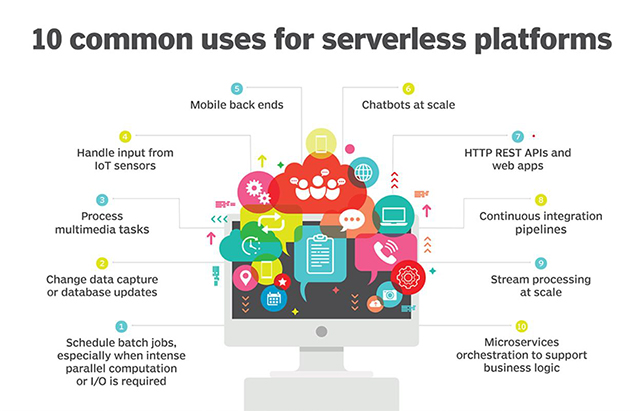

Serverless Architecture / Serverless Computing:

Serverless computing, also known as Function as a Service (FaaS), is an execution model in cloud computing where developers can build and run applications without the need to manage or provision the underlying infrastructure. In a serverless architecture, developers write code in the form of individual functions that perform specific tasks or operations. These functions are deployed to a serverless platform, such as AWS Lambda, Azure Functions, or Google Cloud Functions, which dynamically manages the allocation of resources required to run the functions.

The term “serverless” does not imply the absence of servers altogether; rather, it refers to the fact that developers do not need to explicitly provision, manage, or scale servers. The cloud provider or the data center integrator handles all routine infrastructure management and maintenance, including updating the operating system (OS), applying patches, managing security, monitoring systems and planning capacity. Many cloud platforms offer serverless computing; popular options include AWS Lambda, Microsoft Azure Functions, Google Cloud Functions and Oracle Cloud Infrastructure (OCI) Functions.
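To make the idea of "individual functions that perform specific tasks" concrete, here is a minimal sketch in Python. The `lambda_handler(event, context)` signature follows the convention AWS Lambda uses for Python functions; the event shape (a dict with a `"text"` key) and the keyword-based `classify` stand-in for a real ML model are hypothetical, chosen only so the example runs locally without any cloud account.

```python
import json

# Hypothetical stand-in for a trained ML model: a tiny keyword lexicon.
POSITIVE = {"good", "great", "fast", "reliable"}
NEGATIVE = {"bad", "slow", "broken", "expensive"}


def classify(text: str) -> str:
    """Toy sentiment classifier standing in for model inference."""
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def lambda_handler(event, context):
    """One function, one task: the platform calls this once per event.

    `event` is assumed to be a dict with a "text" key; `context` carries
    runtime metadata on AWS Lambda and is unused in this sketch.
    """
    label = classify(event.get("text", ""))
    return {"statusCode": 200, "body": json.dumps({"label": label})}


if __name__ == "__main__":
    # Local invocation: no platform needed, so context is simply None.
    print(lambda_handler({"text": "The service is fast and reliable"}, None))
```

Because the function is just code, it can be called directly for local testing; on the platform, the provider invokes it per event and handles provisioning, scaling and patching.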

Benefits of Serverless Architectures for AI and ML Applications:

• Scalability on-demand: Traditional infrastructure models often require developers to provision resources in advance, which can be time-consuming and costly. Serverless architectures provide automatic resource allocation, enabling applications to scale seamlessly in response to fluctuating demands. This scalability is crucial for AI and ML applications, which often require substantial computational power to process and analyze large volumes of data.

• Integration of pre-built services and tools: Cloud providers offer a wide range of AI and ML services that can be readily integrated into serverless applications. These services include natural language processing, image recognition, and speech-to-text conversion, among others. By leveraging these pre-built tools, developers can quickly build sophisticated AI and ML applications without having to develop and maintain complex algorithms and models themselves.

• Cost efficiency: Traditional infrastructure models often involve paying for resources even when they are idle, resulting in wasted spending. In contrast, serverless platforms charge based on actual usage, ensuring that developers only pay for the resources their applications consume. This cost efficiency is especially valuable for AI and ML applications that require bursts of processing power for short durations.

• Accelerated development and deployment: Serverless architectures abstract away the underlying infrastructure, allowing developers to focus on code development and model refinement. By minimizing time spent on operational tasks, serverless platforms facilitate faster development cycles and more rapid innovation. Developers can iterate and experiment quickly, driving advancements in AI and ML applications.
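The cost-efficiency argument can be checked with simple arithmetic. The sketch below compares a pay-per-use bill (execution time multiplied by memory, plus a per-request fee, which is the general shape of pricing on platforms such as AWS Lambda) with an always-on VM billed for every hour of the month. All prices are hypothetical placeholders, not any vendor's actual rates.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation: 24 * 365 / 12


@dataclass(frozen=True)
class ServerlessPricing:
    per_gb_second: float  # hypothetical price per GB-second of execution
    per_request: float    # hypothetical price per invocation


def cost_per_invocation(avg_duration_s: float, memory_gb: float,
                        pricing: ServerlessPricing) -> float:
    """Billed compute (duration x memory) plus the flat request fee."""
    return avg_duration_s * memory_gb * pricing.per_gb_second + pricing.per_request


def serverless_monthly_cost(invocations: float, avg_duration_s: float,
                            memory_gb: float, pricing: ServerlessPricing) -> float:
    """Pay-per-use: zero invocations means a zero bill."""
    return invocations * cost_per_invocation(avg_duration_s, memory_gb, pricing)


def vm_monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """An always-on VM is billed for every hour, busy or idle."""
    return hourly_rate * hours


def break_even_invocations(avg_duration_s: float, memory_gb: float,
                           pricing: ServerlessPricing, vm_cost: float) -> float:
    """Monthly invocation count at which both options cost the same."""
    return vm_cost / cost_per_invocation(avg_duration_s, memory_gb, pricing)


if __name__ == "__main__":
    pricing = ServerlessPricing(per_gb_second=0.00002, per_request=0.0000002)
    vm = vm_monthly_cost(hourly_rate=0.50)
    bursty = serverless_monthly_cost(100_000, avg_duration_s=2.0, memory_gb=2.0,
                                     pricing=pricing)
    print(f"serverless: ${bursty:,.2f}  vm: ${vm:,.2f}")
    print(f"break-even: {break_even_invocations(2.0, 2.0, pricing, vm):,.0f} invocations/month")
```

With these hypothetical numbers, 100,000 two-second inferences a month cost about $8 on the serverless side against $365 for a VM that sits idle most of the time, but beyond roughly 4.55 million invocations a month the VM becomes the cheaper option. The same effect lies behind the "inefficient for long-running apps" caveat listed among the disadvantages of serverless computing.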

Significant Transformations Enacted by Artificial Intelligence:

The emergence of AI has brought about significant transformations across various industries:

• Healthcare: AI is revolutionizing healthcare by improving disease diagnosis, drug discovery, and personalized medicine. Machine learning algorithms can analyze vast amounts of medical data to identify patterns, predict outcomes, and enhance patient care.

• FinTech: AI-powered algorithms enable intelligent fraud detection, risk assessment, and algorithmic trading. Natural language processing facilitates sentiment analysis and customer support, while chatbots enhance customer experiences.

• Manufacturing: AI and ML technologies optimize production processes and enable predictive maintenance and automated quality control. Intelligent robotic systems enhance automation, improving productivity and reducing errors.

• Transportation: AI enables autonomous vehicles, optimizes traffic management and enhances safety. Machine learning algorithms analyze traffic patterns and predict maintenance needs, improving efficiency and reducing costs.

• Retail at Scale: AI powers personalized recommendations, demand forecasting, and inventory management. Chatbots and virtual assistants enhance customer interactions, providing personalized shopping experiences.

• E-Commerce: AI algorithms analyze customer behaviour, preferences, and historical data to provide personalized product recommendations. This enhances the shopping experience by showing users relevant items, increasing the likelihood of conversion.

The advantages of serverless computing include the following:

• Cost-effectiveness. Users and developers pay only for the time when code runs on a serverless compute platform. They don’t pay for idle virtual machines (VMs).

• Easy deployment. Developers can deploy apps in hours or days rather than weeks or months.

• Autoscaling. Cloud providers handle scaling resources up as demand grows and spinning instances down when the code isn’t running.

• Increased developer productivity. Developers can spend most of their time writing and developing apps instead of dealing with servers and runtimes.
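Autoscaling with scale-to-zero can be reduced to a small rule: run just enough instances that none handles more than a target number of concurrent requests, and run none at all when there is no traffic. The sketch below is loosely modeled on concurrency-based autoscaling of the kind Knative uses; the parameter names and the hard `max_instances` cap are assumptions, and real autoscalers also smooth their metrics over time windows before acting.

```python
import math


def desired_instances(in_flight_requests: int, target_concurrency: int,
                      max_instances: int) -> int:
    """Instances needed so that each handles at most `target_concurrency` requests.

    Returns 0 when there is no traffic (scale to zero) and never exceeds
    `max_instances`, a hypothetical quota set by the platform operator.
    """
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    if in_flight_requests <= 0:
        return 0
    return min(math.ceil(in_flight_requests / target_concurrency), max_instances)


if __name__ == "__main__":
    # A bursty trace of concurrent requests sampled over time (made-up numbers).
    trace = [0, 3, 250, 40, 0]
    print([desired_instances(r, target_concurrency=10, max_instances=20) for r in trace])
    # prints [0, 1, 20, 4, 0]
```

Every transition from zero to one instance in such a trace is a point where a request has to wait for a new instance to start.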

The disadvantages of serverless computing include the following:

• Vendor lock-in. Switching cloud providers might be difficult because the way serverless services are delivered can vary from one vendor to another.

• Inefficient for long-running apps. Long-running tasks can cost much more on a serverless platform than the same workload running on a VM or dedicated server.

• Latency. The first invocation of a function, or the first after a period of inactivity, takes longer because the platform must first provision and initialize an execution environment. This delay is often known as a cold start.

Is serverless possible in a private-cloud infrastructure?

So far, when talking about serverless applications, we have assumed a service such as AWS Lambda or Google Cloud Functions, passing the responsibility for infrastructure management to whichever provider we choose.

However, some companies will want to manage their cloud infrastructure themselves. Whether for compliance with certain regulations or simply because they already have infrastructure they prefer to use, there is a business case for companies that want the advantages of a serverless model while still managing the servers themselves.

The value of serverless in a private cloud

Good software companies are constantly looking for ways to let their developers concentrate on building applications that bring value to their clients faster and more easily. Cost optimization and ease of maintenance are the biggest drivers.

However, developers nowadays need to think about many concerns in their applications beyond business requirements: how those applications will scale, memory management, implementation of security protocols, and infrastructure specifications such as RAM and disk space, each of which is a whole world of its own for developers to dive into.

Container platforms such as Docker, and PaaS (Platform as a Service) offerings in general, have done a lot recently to abstract those concerns away and make them manageable for software developers: they provide small, self-contained, write-once-run-anywhere pieces of infrastructure that remain consistent across all your environments.

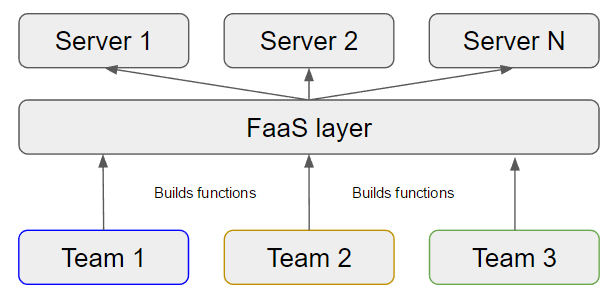

Serverless (or Function as a Service in this context) is the next logical step in this evolution. It’s not about removing the server side from the equation, but about taking it off developers’ minds and putting it in the hands of more specialized DevOps teams.

What’s the value of FaaS that PaaS doesn’t provide already?

Both PaaS and FaaS have what might look like overlapping concerns:

Both abstract the infrastructure away from developers, allowing them to concentrate on coding. PaaS is based on deploying full applications, while FaaS deploys only functions.

Some of the differences are:

• FaaS allows developers to prototype faster. Without having to think about full (both legacy and new) projects, developers can focus on building business value from day one.

• FaaS gives developers the freedom to use multiple languages. If one part of the application makes more sense written in Scala while another would be easier to implement in Go, FaaS makes this possible without maintaining multiple applications.

Obviously, taking advantage of serverless features doesn’t prevent you from adopting other solutions like PaaS at the same time. It’s not all-or-nothing. It’s just another tool in the toolbox companies can give to their developers to make them more productive.

Multiple developer teams can share a FaaS layer, which internally knows how many physical or virtual nodes it needs and manages replication and communication. All those teams do is write sets of functions focused on bringing value to their clients.
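The shared FaaS layer can be pictured as a routing table owned by the platform team, with each product team contributing only functions. The toy, in-process Python sketch below shows that division of labour; the `FunctionRegistry` class, the `team/name` route format and both example functions are hypothetical, and a real FaaS layer would additionally run each function in its own isolated, replicated and autoscaled environment.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class FunctionRegistry:
    """Toy, in-process model of a shared FaaS layer (hypothetical).

    Teams register functions under their own namespace; the layer owns routing.
    """

    def __init__(self) -> None:
        self._functions: Dict[str, Handler] = {}

    def register(self, team: str, name: str) -> Callable[[Handler], Handler]:
        def decorator(fn: Handler) -> Handler:
            route = f"{team}/{name}"
            if route in self._functions:
                raise ValueError(f"{route} is already registered")
            self._functions[route] = fn
            return fn
        return decorator

    def invoke(self, route: str, payload: dict) -> dict:
        fn = self._functions.get(route)
        if fn is None:
            raise LookupError(f"no function registered at {route}")
        return fn(payload)


layer = FunctionRegistry()


@layer.register("payments", "fraud-score")
def fraud_score(payload: dict) -> dict:
    # Hypothetical threshold rule standing in for a fraud-detection model.
    return {"flag": payload["amount"] > 10_000}


@layer.register("retail", "basket-total")
def basket_total(payload: dict) -> dict:
    return {"total": sum(payload["prices"])}


if __name__ == "__main__":
    print(layer.invoke("payments/fraud-score", {"amount": 25_000}))     # {'flag': True}
    print(layer.invoke("retail/basket-total", {"prices": [10, 20, 5]}))  # {'total': 35}
```

Each team touches only its own decorated functions; adding a new capability means registering another function, not deploying another application.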

This looks a lot like the infrastructure provided by PaaS platforms such as Cloud Foundry. In a sense, it can work the same way, except that developers focus on building functions instead of full applications.

Also, nothing prevents you from merging those functions into full projects in the future and deploying them through PaaS.

FaaS lets us change the way we build applications: create value first and leave the work of structuring the project for later. By then, you will have enough information about your business logic to make more informed decisions about how the project should be structured (for example, should you go domain-driven or MVC?).

Serverless Functions Solutions for the Data Center

Deploying serverless functions in your own data center (or preferably a colocation data center) is not much more complicated than running them in the public cloud.

There are a number of reasons you may want to run serverless functions in your own data center. One is cost. Public cloud vendors charge you each time a serverless function executes, so you have a continuous ongoing expense when you use their services. If you run functions on your own hardware, most of your investment occurs upfront, when you set up the serverless environment. There is no direct cost for each function execution. Your total cost of ownership over the long term may end up being lower than it would be for an equivalent service in a public cloud.
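The long-term cost argument comes down to a break-even calculation: an upfront investment plus a fixed monthly running cost on one side, a per-execution bill on the other. The sketch below finds the first month in which the cumulative on-premises cost is no higher than the cumulative public cloud cost. The figures are hypothetical, and the model deliberately ignores hardware refresh cycles, financing and the duration-based part of cloud billing.

```python
import math
from typing import Optional


def breakeven_month(upfront: float, onprem_monthly: float,
                    executions_per_month: float,
                    cloud_price_per_million: float) -> Optional[int]:
    """First month in which cumulative on-prem cost <= cumulative cloud cost.

    On-prem after m months: upfront + m * onprem_monthly
    Cloud after m months:   m * cloud_monthly
    Returns None when the on-prem running cost is not below the cloud bill,
    because the upfront investment is then never recovered.
    """
    cloud_monthly = executions_per_month / 1_000_000 * cloud_price_per_million
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None
    return max(1, math.ceil(upfront / monthly_saving))


if __name__ == "__main__":
    # Hypothetical figures: 120,000 upfront, 4,000/month for power, space and
    # operations, 50 million executions/month at 200 per million in the cloud.
    print(breakeven_month(120_000, 4_000, 50_000_000, 200.0))  # prints 20
```

Under these assumptions the private deployment pays for itself after 20 months; if monthly execution volume were low enough that the cloud bill fell below the on-premises running cost, it would never break even.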

Security is another consideration. By keeping serverless functions in your data center, you can keep all of your data and application code out of the cloud, which could help avoid certain security and compliance challenges.

Performance, too, may be better in certain situations for serverless functions that run in your own data center. For example, if the functions need to access data that is stored in your data center, running the functions in the same data center would eliminate the network bottlenecks you may face if your functions ran in the cloud but had to send or receive data from a private facility.

A final key reason to consider serverless solutions other than those available in the public cloud is that the latter offer native support only for functions written in certain languages. Functions developed in other languages can typically be executed, but only through wrappers, which can add performance overhead. When you deploy your own serverless solution, you have a greater ability to configure how it operates and which languages it will support.

That said, the various serverless frameworks that are available for data centers have their own limitations in this respect, so you should evaluate which languages and packaging formats they support before selecting an option.

There are two main approaches to setting up a serverless architecture outside the public cloud.

The first is to run a private cloud within the data center, then deploy a serverless framework on top of it. In an OpenStack cloud, you can do this using Qinling. Kubernetes (which is not exactly a private cloud framework but is similar in that it lets you consolidate a pool of servers into a single software environment) supports Knative, Kubeless, and OpenWhisk, among other serverless frameworks.
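For the first approach, a Kubernetes cluster running Knative deploys a function as a Knative `Service` resource. The Python sketch below builds such a manifest as a dict and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The `apiVersion`, `kind` and the `autoscaling.knative.dev/min-scale` and `max-scale` annotations follow Knative Serving's resource format; the service name, namespace and container image are hypothetical placeholders, and the supported annotations should be checked against the Knative version actually installed.

```python
import json


def knative_service(name: str, image: str, namespace: str = "default",
                    min_scale: int = 0, max_scale: int = 10) -> dict:
    """Build a Knative Serving `Service` manifest for one containerized function.

    min_scale=0 allows scale to zero; min_scale>=1 keeps instances warm
    (avoiding cold starts) at the cost of idle capacity.
    """
    # This sketch requires an explicit, positive upper bound.
    if max_scale < 1 or not 0 <= min_scale <= max_scale:
        raise ValueError("need 0 <= min_scale <= max_scale and max_scale >= 1")
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "template": {
                "metadata": {
                    "annotations": {
                        # Kubernetes annotation values must be strings.
                        "autoscaling.knative.dev/min-scale": str(min_scale),
                        "autoscaling.knative.dev/max-scale": str(max_scale),
                    }
                },
                "spec": {"containers": [{"image": image}]},
            }
        },
    }


if __name__ == "__main__":
    manifest = knative_service(
        name="sentiment-inference",                     # hypothetical
        image="registry.example.com/ml/inference:1.0",  # hypothetical
        min_scale=1,
    )
    print(json.dumps(manifest, indent=2))
```

Redirecting the output to a file such as `service.json` and running `kubectl apply -f service.json` would then ask Knative to create the service; the cluster, not the developer, decides where its instances run.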

The second approach is to use a hybrid cloud framework that allows you to run a public cloud vendor’s serverless framework in your own data center. Azure Stack, Microsoft’s hybrid cloud solution, supports the Azure serverless platform, and Google Anthos has a serverless integration via Cloud Run. (As for Amazon’s cloud, AWS Outposts, its hybrid cloud framework, does not currently offer a serverless option.)

The first approach will require more effort to set up, but it yields greater control over which serverless framework you use and how it’s configured. It may also better position you to achieve lower costs, because many of the serverless solutions for private clouds are open source and free to use.

On the other hand, the second approach, using a hybrid cloud solution from a public cloud vendor, will be simpler to deploy for most teams, because it does not require setting up a private cloud. It also offers the advantage of being able to deploy the same serverless functions in your data center or directly in the public cloud. A serverless function deployed via Azure Stack can be lifted and shifted with minimal effort to run on Azure Functions.

Conclusion:

The global serverless computing market is expected to increase by more than 23.17% between 2021 and 2026, according to a report from Mordor Intelligence.

“Advancements in computing technology are enabling organizations to incorporate a serverless environment, thereby augmenting the market,” the report said. “The benefits of Serverless Computing such as unconditional development and deployment, built-in scalability among others is playing an important role in supporting the rapid adoption of Serverless Computing thereby fueling the growth of the market.”

About the Author:

Ms. Mahima M. Dominic

Lead-Strategy

AdaniConnex

Women in ICT Global Awards 2022 Runner-Up

Ms. Mahima M. Dominic is an Electronics & Communication Engineer and IT/ITES business enthusiast with a flair for storytelling about IaaS, PaaS, DaaS, SaaS, MaaS, BaaS and XaaS (hybrid cloud computing as a service) for Enterprise, BFSI, PSU and Government business in India.

Ms. Mahima M. Dominic is a persuasive and motivated person with good probing and problem-solving skills and the ability to come up with great ideas. She has a strong aspiration for success and the enthusiasm to work hard, effectively and efficiently. A dedicated, results-driven individual, she can add value to any business by going the extra mile to deliver results.

Ms. Mahima M. Dominic believes in building, and being part of, an organisation that is deeply committed to values, in the firm belief that success in business will be the inevitable result. An undaunted commitment to values remains at the core of her heart.

Solution Stitching, RFP/Bid Preparation, Concept Selling, Event Planning and Public Relations are among the key skills of Ms. Mahima M. Dominic.

Ms. Mahima M. Dominic has excellent communication skills in English and Hindi along with adequate knowledge of Tamil, Marathi & French. She can meet and liaise with clients to discuss their technology journey and transformation.

Ms. Mahima M. Dominic holds the following licences and certifications:

https://www.linkedin.com/in/ma

Ms. Mahima M. Dominic has received the following honours and awards:

https://www.linkedin.com/in/ma

Ms. Mahima M. Dominic can be contacted at: